Prepare training data

You'll estimate global seagrass habitats with a spatial statistics approach that uses a set of locations where seagrass is known to exist and a set of ocean measurements. From this data, the Presence-only Prediction tool, which implements a machine learning technique known as maximum entropy (MaxEnt), will estimate the probability of seagrass at other locations, given the ocean measurements. To perform this analysis, you'll need to clean and prepare the data. First, you will create points representing seagrass presence around the United States coastline. Then, you'll generate interpolation surfaces representing ocean measurements to serve as predictors for your model.

Download and explore the data

First, you'll download the seagrass data and explore it.

- Download the Seagrass Habitat Prediction ArcGIS Pro project package.

- Double-click the SeagrassPrediction.ppkx file to open the project in ArcGIS Pro.

A global map opens. In the Contents pane, there are four feature classes:

- Global ocean measurements—Ecological Marine Units point data that contains ocean measurements up to a 90-meter water depth.

- USA seagrass—Polygon data for seagrass occurrence. Every polygon in USA seagrass is an identified seagrass habitat.

- USA shallow waters—Shallow bathymetry polygon for the continental United States used as the study area for model training.

- Global shallow waters—Global shallow bathymetry polygon used to predict seagrass globally.

The data layers are in the Equal Earth projected coordinate system, which is appropriate for global analysis.

- In the Contents pane, uncheck the Global ocean measurements layer.

The light blue areas represent the shallow bathymetric zones around the world where the water depth may permit seagrass habitat.

- On the ribbon, click the Map tab, and in the Navigate section, click Bookmarks and click Florida.

The bright green areas are where seagrass habitat has been identified. You will use information about known seagrass presence locations around the continental United States to make predictions about where else around the world seagrass habitat might exist. Since this will be a prediction at a global scale, it will not be as appropriate for identifying seagrass habitats for smaller areas—for example, identifying the locations within a specific bay where seagrass is most likely to be present. Later, you will learn how to repurpose a model for other prediction scenarios.

- In the Contents pane, check the Global ocean measurements layer to turn it back on.

These Global ocean measurements points show Ecological Marine Units (EMU) decadal average (50-year mean) data values. Most of the data points lie outside the seagrass polygons. To develop a good prediction model using the Presence-only Prediction tool, you need many points in known seagrass areas with corresponding ocean measurement data. If you use only the subset of EMU_Global_90m points that fall within the seagrass polygons, you'll have too few observations.

To solve this problem, you will create a set of random points within the known seagrass habitat to train the model. You will also interpolate surfaces from the Global ocean measurements variables and use the random seagrass habitat points to sample the values of the interpolated measurements. The Global ocean measurements variables are temp (temperature), salinity, dissO2 (dissolved oxygen), nitrate, phosphate, silicate, and srtm30 (depth).

You will first dissolve the U.S. seagrass polygons into a single multipart feature and create a set of 5,000 random points within the areas of known seagrass presence.

Create training points

Next, you'll create the training data that the Presence-only Prediction tool needs to model the relationship between seagrass occurrence and ocean conditions. There will be two types of training data: the points representing known locations of seagrass presence and the rasters representing the seven predictor variables (ocean measurements). You'll create the random points within the extent of the USA seagrass polygons. Create Random Points generates the requested number of points within each constraining feature. Because you want a specific total number of random points spread across all of these habitat areas, you'll first dissolve the many polygons in this layer into a single multipart feature.
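The following Python sketch is a rough illustration of what "5,000 points across the whole habitat area" means. It draws points uniformly over a multipart polygon by rejection sampling: it samples the combined bounding box and keeps only the points that fall inside some part. This is an illustrative stand-in, not the tool's actual algorithm. The function names and the polygon representation are hypothetical.

```python
import random

# Hypothetical representation: a part is a list of (x, y) vertices,
# and a multipart feature is a list of parts.
Part = list[tuple[float, float]]

def point_in_part(x: float, y: float, part: Part) -> bool:
    """Ray-casting test: toggle on each edge crossed by a ray running right from (x, y)."""
    inside = False
    n = len(part)
    for i in range(n):
        x1, y1 = part[i]
        x2, y2 = part[(i + 1) % n]
        if (y1 > y) != (y2 > y):
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside

def random_points_in_multipart(parts: list[Part], n: int, seed: int = 0) -> list[tuple[float, float]]:
    """Draw n points uniformly over the union of all parts (rejection sampling)."""
    rng = random.Random(seed)
    xs = [x for part in parts for x, _ in part]
    ys = [y for part in parts for _, y in part]
    xmin, xmax, ymin, ymax = min(xs), max(xs), min(ys), max(ys)
    points = []
    while len(points) < n:
        x, y = rng.uniform(xmin, xmax), rng.uniform(ymin, ymax)
        # Keep the candidate only if it lies inside any part of the dissolved feature.
        if any(point_in_part(x, y, part) for part in parts):
            points.append((x, y))
    return points
```

Every accepted point is uniform over the union of the parts, so larger parts receive proportionally more points. For example, if one part is four times the area of another, about 80 percent of the points land in the larger part.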

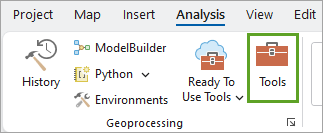

- On the ribbon, click Analysis, and in the Geoprocessing section, click Tools.

- In the Geoprocessing pane, in the search box, type pairwise dissolve.

- In the search results, click the Pairwise Dissolve tool to open it.

- For Input Features, click the drop-down list and click the USA seagrass layer.

- Accept the default Output Feature Class name, USAseagrass_PairwiseDissolve.

- Accept the default values for the other parameters, and verify that the Create multipart features box is checked.

- Click Run.

When the tool completes, the new feature layer, USAseagrass_PairwiseDissolve, is added to the map and listed in the Contents pane. You will use this version of the seagrass layer, so remove the original layer to keep your workspace clean and avoid confusion.

- Right-click the USA seagrass layer and click Remove.

Now you will generate the random seagrass presence location points.

- In the Geoprocessing pane, click the back arrow and in the search box, type create random points.

- In the search results, click the Create Random Points tool.

- For Output Point Feature Class, type USA_Train.

- For Constraining Feature Class, click the drop-down list and choose USAseagrass_PairwiseDissolve.

- For Number of Points [value or field], change the value to 5000.

- Click Run.

The random points appear on the map.

Note:

The colors of the points are randomly generated and may differ from the image.

Now you have a new feature class with 5,000 points that fall within the known seagrass habitat around the United States coastline, which you will use to train your presence-only prediction model. At the moment, there are no environmental variables associated with these locations. That information is stored at the locations of the Global ocean measurements points. To address this, you'll create continuous interpolation surfaces for the environmental variables sampled at the Global ocean measurements points.

Interpolate the environmental rasters

The Global ocean measurements feature class contains data from the Ecological Marine Units dataset. Its attributes include the explanatory variables you need for presence-only prediction, such as salinity, ocean temperature, and nitrate level. You will use the empirical Bayesian kriging (EBK) geostatistical method to interpolate raster surfaces from the environmental values stored in the Global ocean measurements layer. You will then sample the values of those rasters at the USA_Train points so that the presence-only prediction model has explanatory data at the location of each training point.

- In the Geoprocessing pane, click the back arrow and in the search box, type empirical bayesian kriging.

- Right-click the Empirical Bayesian Kriging tool in the search results and click Batch.

The Batch Empirical Bayesian Kriging page appears.

- For Choose a batch parameter, click the drop-down list and choose Z value field.

- Leave the other parameters as the default values and click Next.

- For Input features, click the drop-down list and click Global ocean measurements.

- For Batch Z value field, click the Add Many button.

A list of the fields appears.

- Check the boxes for the following seven ocean measurement variables: temp, salinity, dissO2, nitrate, phosphate, silicate, and srtm30, then click Add.

When you click Add, the fields are added to the tool pane.

- Change the Output raster to EBK_%Name%.

This creates one raster for each field, named EBK_ followed by the field name (for example, EBK_temp).

- For Output cell size, change the value to 25000.

- For Semivariogram model type, click the drop-down list and choose Linear.

- Expand the Additional Model Parameters section and set the Maximum number of points in each local model to 50.

- Set the Number of simulated semivariograms to 50.

These settings help increase the speed of the EBK prediction by limiting the number of points in each model and the number of simulated semivariograms. Increasing these values may enhance the precision of the predictions but will also increase the tool’s processing time. To better understand these parameters, see the What is empirical Bayesian kriging? help page.
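EBK automates semivariogram modeling. It repeatedly simulates data and estimates many semivariograms within local subsets of the points, and the parameters above cap the size of those subsets and the number of simulations. The basic building block is the empirical semivariogram, which measures how dissimilar values become as the distance between points grows. The Python sketch below computes the classical empirical semivariogram for a single dataset. It is not EBK itself, and the function name and lag settings are illustrative.

```python
import math
from collections import defaultdict

def empirical_semivariogram(points, values, lag_width, n_lags):
    """Classical estimator: gamma(h) = sum((z_i - z_j)^2) / (2 * N(h)) for each distance bin.

    Returns (bin center, semivariance, pair count) for every bin that contains pairs.
    """
    sums = defaultdict(float)
    counts = defaultdict(int)
    n = len(points)
    for i in range(n):
        for j in range(i + 1, n):
            h = math.dist(points[i], points[j])
            b = int(h // lag_width)  # index of the distance bin this pair falls into
            if b < n_lags:
                sums[b] += (values[i] - values[j]) ** 2
                counts[b] += 1
    return [((b + 0.5) * lag_width, sums[b] / (2 * counts[b]), counts[b]) for b in sorted(counts)]
```

For values that change steadily with distance, the semivariance grows with the lag. A semivariogram that levels off instead indicates the distance beyond which points no longer inform each other.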

- Expand the Search Neighborhood Parameters section and set the Search neighborhood to Standard Circular and reduce the Min neighbors to 3.

Using a Standard Circular search neighborhood reduces the tool processing time. Lowering the minimum number of neighbors ensures that values at unknown locations are estimated even where only a few neighboring points are available. Review the Empirical Bayesian Kriging tool documentation for more information about these and other parameters.

- Click Run.

Because this tool will run in batch mode to generate seven separate global interpolation rasters, it will take some time to run (approximately five minutes).

The tool will complete with warnings indicating that NODATA values were ignored for several features. This is not a problem.

Once the Batch Empirical Bayesian Kriging tool completes, each ocean measurement surface is added to the map. They should look similar to EBK_nitrate, the EBK model for nitrate concentration.

- Click Save Project.

You have prepared data for modeling by generating random points that represent locations of seagrass presence within seagrass habitat around the United States coastline and creating explanatory rasters with empirical Bayesian kriging. Next, you'll use the training data to create a model to predict the presence of seagrass habitats globally.

Predict habitat and refine results

Now that you have prepared your data, you'll use the Presence-only Prediction tool to create a model and make a prediction. Presence-only prediction uses the maximum entropy method (MaxEnt), which is a machine learning approach that is particularly well-suited for species distribution modeling since it can handle scenarios in which absence data is not available.

Perform presence-only prediction

You’ll use the Presence-only Prediction (MaxEnt) geoprocessing tool to train a model to predict seagrass habitat and create a prediction raster showing the probability of seagrass habitat around the world’s coastlines. You will evaluate the model diagnostics and iterate over the modeling process to improve your model.

- In the Geoprocessing pane, click the back arrow and in the search box, type presence.

- In the search results, click Presence-only Prediction (MaxEnt).

- For Input Point Features, click the drop-down list and click the USA_Train layer.

- For Explanatory Training Rasters, click the Add Many button.

A list of the raster layers appears.

- Check the boxes for the following seven ocean measurement rasters: EBK_dissO2, EBK_nitrate, EBK_phosphate, EBK_salinity, EBK_silicate, EBK_srtm30, and EBK_temp, then click Add.

When you click Add, the rasters are added to the tool pane.

These are all continuous measurement rasters, so leave their Categorical check boxes unchecked. The tool also accepts categorical explanatory rasters, and for those you would check the box.

- For Explanatory Variable Expansions (Basis Functions), check the boxes for Original (Linear), Squared (Quadratic), Pairwise interaction (Product), and Smoothed step (Hinge).

Basis functions transform (or expand) the explanatory variables so that the model can capture more complex relationships between seagrass presence and each variable. Selecting multiple basis functions adds all of the transformed versions of the variables to the model, and regularization then selects the best-performing ones. In this case, you select all but the Discrete step option. Smoothed step and Discrete step are relatively similar, so selecting only one saves processing time. Review the tool documentation for more information about each basis function.
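The following minimal Python sketch makes the Original, Squared, and Pairwise interaction expansions concrete for a single observation. It assumes the raw values are used directly. In practice, variables are often rescaled first, and the tool's internal feature naming and scaling are not shown here.

```python
from itertools import combinations

def expand(row: dict[str, float]) -> dict[str, float]:
    """Linear, squared (quadratic), and pairwise-product features for one observation."""
    feats = dict(row)  # Original (Linear): the values themselves
    for name, v in row.items():
        feats[f"{name}^2"] = v * v  # Squared (Quadratic)
    for a, b in combinations(sorted(row), 2):
        feats[f"{a}*{b}"] = row[a] * row[b]  # Pairwise interaction (Product)
    return feats
```

With the seven EBK rasters, these three expansions alone produce 7 + 7 + 21 = 35 candidate features before any hinge features are added. That number of candidates is why regularization is needed to keep only the useful ones.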

- For Number of Knots and Study Area, accept the default values of 10 and Convex hull.

Number of Knots is a setting for the Smoothed step (Hinge) basis function. It specifies the number of equal intervals between the minimum and maximum values of each variable, and both forward hinge and reverse hinge transformed variables are created. The Convex hull setting means that the study area is the convex hull of all input training points. The tool generates background points, representing potential absence of seagrass, in the parts of the study area that do not contain presence points.
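A hinge feature is zero on one side of a knot and rises linearly on the other side. The sketch below generates forward and reverse hinge values at knots placed on the interior boundaries of `n_knots` equal intervals between a variable's minimum and maximum. The knot placement and the 0-to-1 scaling are assumptions made for illustration, and the tool's exact definitions may differ.

```python
def hinge_features(x, xmin, xmax, n_knots=10):
    """Forward and reverse hinge values of x at equally spaced interior knots (illustrative).

    Returns a list of (knot, forward, reverse) tuples.
    """
    step = (xmax - xmin) / n_knots
    feats = []
    for i in range(1, n_knots):  # interior boundaries only; a hypothetical choice
        k = xmin + i * step
        forward = max(0.0, (x - k) / (xmax - k))  # 0 below the knot, rises to 1 at xmax
        reverse = max(0.0, (k - x) / (k - xmin))  # 0 above the knot, rises to 1 at xmin
        feats.append((k, forward, reverse))
    return feats
```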

- Check the Apply Spatial Thinning check box. Set the Minimum Nearest Neighbor Distance to 2 and for the units, choose Kilometers. Verify that the Number of Iterations for Thinning is set to 10.

These settings help minimize potential sampling bias by removing presence and background points that are within the specified distance of each other, so that no area is spatially over-sampled. The distance between background points depends on the spatial resolution of the explanatory rasters, so using a 2-kilometer distance in this case prevents over-sampling of background areas compared to the seagrass presence areas. Using multiple iterations lets the tool make several thinning attempts and keep the one that retains the most training points.
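One common way to thin points is a randomized greedy pass. The pass visits the points in random order and keeps each one only if it is at least the minimum distance from every point already kept. The result depends on the visiting order, so repeating the pass and keeping the largest result mirrors the idea behind Number of Iterations for Thinning. This Python sketch assumes that approach and planar coordinates in kilometers. It is not the tool's documented algorithm.

```python
import math
import random

def thin_once(points, min_dist, rng):
    """One random-order greedy pass: keep a point only if it is >= min_dist from all kept points."""
    order = points[:]
    rng.shuffle(order)
    kept = []
    for p in order:
        if all(math.dist(p, q) >= min_dist for q in kept):
            kept.append(p)
    return kept

def spatial_thin(points, min_dist, iterations=10, seed=0):
    """Repeat the greedy pass and return the attempt that retains the most points."""
    rng = random.Random(seed)
    best = []
    for _ in range(iterations):
        kept = thin_once(points, min_dist, rng)
        if len(kept) > len(best):
            best = kept
    return best
```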

- Leave the Output Trained Model File box blank.

You will want to save a model file to share your analysis later, but only after you are sure that the model performs well.

- Expand the Advanced Model Options section, and verify that the Relative Weight of Presence to Background is set to 100, Presence Probability Transformation (Link Function) is C-log-log and the Presence Probability Cutoff is 0.5.

The Relative Weight of Presence to Background value of 100 indicates that it is unknown whether seagrass might be present at the tool-generated background point locations.

It is appropriate to use C-log-log for the Presence Probability Transformation in this scenario because seagrass has minimal ambiguity in terms of location (that is, seagrass has no mobility or migration to take into account). The Presence Probability Cutoff of 0.5 indicates that locations with probabilities greater than 0.5 are classified as present.
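For reference, the standard inverse link functions look like this in Python, together with the cutoff rule described above. The linear predictor `eta` is a placeholder. This sketch does not cover how the tool computes its raw model output before the transformation.

```python
import math

def cloglog(eta: float) -> float:
    """Inverse complementary log-log link: maps a linear predictor to a probability."""
    return 1.0 - math.exp(-math.exp(eta))

def logistic(eta: float) -> float:
    """Inverse logit link, shown for comparison."""
    return 1.0 / (1.0 + math.exp(-eta))

def classify(prob: float, cutoff: float = 0.5) -> bool:
    """A location is classified as present only if its probability is greater than the cutoff."""
    return prob > cutoff
```

Unlike the logistic curve, the C-log-log curve is asymmetric. At `eta = 0`, it returns about 0.632 rather than 0.5.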

- Expand the Training Outputs section, and for Output Trained Features, type trainfeatures1.

This will be an output feature class containing the trained features (presence points and background points, in this case) used to generate the model.

- For Output Response Curve Table, type rc1.

- For Output Sensitivity Table, type sensitivity1.

The Output Response Curve Table and Output Sensitivity Table are helpful for understanding the performance of the model.

- Expand the Prediction Options section, and for Output Prediction Raster, type seagrass_predict1.

This will be the output raster that will show the model’s predictions of the likelihood of seagrass habitat presence.

- Ensure the Match Explanatory Rasters table contains matching values for the Prediction and Training rasters.

Earlier, you designated the explanatory rasters for training the model on the coastal United States data points and here, you use the same rasters to make a global prediction. In some cases, you might want to make a prediction using different explanatory rasters. For example, you might use the same ocean measurement variables, but with projected values for 50 years in the future, to assess how climate change may impact seagrass habitat and range.

- Leave the Allow Predictions Outside of Data Ranges box checked.

Because you are only using data from the coastal United States to train the model, you will need to allow predictions outside of the data ranges to make worldwide predictions.

Note:

Predicting outside of the data ranges like this can result in less reliable predictions, especially in regions where the values are well outside the training data ranges.

Keep this in mind later when you look at the prediction results for places such as Antarctica, where conditions are very different from the United States coast.

- Expand the Validation Options section. For Resampling Scheme, click the drop-down list and choose Random, and accept the default value of 3 for Number of Groups.

These parameters instruct the tool to conduct k-fold cross-validation of the model, here with three randomly assigned groups (k = 3).
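The Random resampling scheme works roughly like this. The training points are shuffled and split into k groups. The model is trained on k - 1 groups and evaluated on the held-out group, and this repeats until every group has been held out once. This Python sketch works on point indices only. The function names are hypothetical, and the sketch does not reproduce how the tool assigns groups internally.

```python
import random

def random_k_folds(n_points: int, k: int = 3, seed: int = 0) -> list[list[int]]:
    """Shuffle point indices and deal them into k groups of nearly equal size."""
    idx = list(range(n_points))
    random.Random(seed).shuffle(idx)
    return [idx[g::k] for g in range(k)]

def cross_validation_splits(n_points, k=3, seed=0):
    """Yield (train, test) index lists; each group is held out exactly once."""
    folds = random_k_folds(n_points, k, seed)
    for g, test in enumerate(folds):
        train = [i for h, fold in enumerate(folds) if h != g for i in fold]
        yield train, test
```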

The tool is almost ready to run. You'll add an Environments setting to restrict the area that is processed before running it.

- At the top of the tool pane, click the Environments tab.

- Scroll down, and in the Raster Analysis section, for Mask, click the drop-down list and choose the Global shallow waters layer.

Because seagrass grows in shallow waters, restricting the processing to shallow water areas saves time.

- Click Run.

The tool will take some time (about two minutes) to run.

- In the Contents pane, uncheck the boxes to turn off all the layers except the seagrass_predict1 layer and the basemap.

Note:

You can press the Ctrl key and click a check box to turn multiple layers on or off at the same time. See the documentation for more keyboard shortcuts.

The map shows areas of predicted seagrass habitat, symbolized with darker purple representing the areas with the highest probability of seagrass presence. The prediction may be less accurate in certain areas, such as Antarctica, where the explanatory variables are outside the ranges used for training.

Evaluate the prediction

After running the prediction, you will evaluate the results and determine whether changes need to be made to your prediction model. After reviewing the model diagnostics and updating the prediction, you will save a model file to share with others who want to replicate or extend your analysis.

Looking at the predicted areas of seagrass habitat, how do you know whether the model you created is valid, or doing a good job of predicting the variable of interest?

In many cases, it is not possible to tell from looking at the prediction result alone. To assess your model, you will need to look at the training data and model diagnostics.

- In the Contents pane, check the box to turn the trainfeatures1 layer on.

- Right-click trainfeatures1 and click Zoom To Layer.

The gray and green points represent background training points created by the tool to gather data on locations where seagrass habitat may or may not exist.

There is a major problem with these data points. The vast majority are over land, which does not make sense for a model that is supposed to predict seagrass habitat. This is a conceptual problem with the model, which highlights the importance of having domain-specific knowledge and understanding each of the tool parameters to ensure the model is correctly specified.

Next, you will check the model diagnostics to see how the model performed.

- At the bottom of the Geoprocessing pane, click View Details.

Note:

You can also access the Details window by opening the History pane, right-clicking Presence-only Prediction (MaxEnt), and clicking View Details.

The Details window provides important information about the model you have created and its performance. It also contains any warnings from the tool run. In this case, the warnings are not a problem for your analysis.

- Click Messages, then scroll down to the Model Summary table.

This table shows the model's omission rate at the given presence probability cutoff (0.5, in this case) and its AUC value. The AUC is the Area Under the ROC (Receiver Operating Characteristic) Curve, which measures model performance by comparing the rates of true and false positives across all possible cutoffs. Lower omission rates and AUC values approaching 1 indicate better model performance.
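Both diagnostics can be computed directly from predicted probabilities. The sketch below assumes you have the predicted probabilities for presence points and for background points as plain Python lists. The omission rate counts presence points at or below the cutoff, which matches the rule that only probabilities greater than the cutoff are classified as present. The AUC is computed as the probability that a randomly chosen presence point scores higher than a randomly chosen background point, which equals the area under the ROC curve. With presence-only data, background points stand in for absences, so this is a presence-versus-background AUC.

```python
def omission_rate(presence_probs, cutoff=0.5):
    """Share of known presence points whose predicted probability does not exceed the cutoff."""
    return sum(p <= cutoff for p in presence_probs) / len(presence_probs)

def auc(presence_probs, background_probs):
    """Probability that a random presence point outranks a random background point (ties count 1/2)."""
    wins = 0.0
    for p in presence_probs:
        for b in background_probs:
            if p > b:
                wins += 1.0
            elif p == b:
                wins += 0.5
    return wins / (len(presence_probs) * len(background_probs))
```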

Note:

There may be small differences in the Omission Rate and AUC values in your results due to minor EBK interpolation differences that depend on your computer's hardware.

The model's AUC (close to 1) is very high, which is promising, but the omission rate (greater than 0.15) is somewhat high. You can also review other information in the Details window to better understand the model, including the regression coefficients and cross-validation summary.

The Cross-Validation Summary table shows that the % Presence - Correctly Classified ranged from 82 percent to 86 percent.

The final aspects of the model you will evaluate are the response curve and sensitivity tables.

- Close the Details window.

- In the Contents pane, scroll down to the Standalone Tables section, and under the rc1 table, in the Charts section, double-click the Partial Response of Continuous Variables chart.

The Partial Response of Continuous Variables chart visualizes the impact of changes in the value of each explanatory variable on presence probability, holding all other variables constant.
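A partial response curve works by sweeping one variable across its observed range while every other variable stays at a fixed reference value, and recording the model's prediction at each step. This Python sketch accepts any prediction function and assumes the reference value is the mean. Both the toy setup and the choice of the mean are assumptions and may not match what the tool uses.

```python
from statistics import mean

def partial_response(predict, data, var, n_steps=5):
    """Vary `var` across its observed range, holding every other variable at its mean.

    `predict` takes a dict of variable values; `data` is a list of such dicts.
    Returns a list of (value of var, prediction) pairs.
    """
    means = {k: mean(row[k] for row in data) for k in data[0]}
    lo = min(row[var] for row in data)
    hi = max(row[var] for row in data)
    curve = []
    for i in range(n_steps):
        x = lo + (hi - lo) * i / (n_steps - 1)
        row = dict(means, **{var: x})  # all variables at their means except var
        curve.append((x, predict(row)))
    return curve
```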

- Click the EBK_SALINITY chart.

Clicking a smaller chart displays that variable in the larger chart on the right. The EBK_SALINITY chart shows that the probability of seagrass habitat presence peaks sharply within a narrow range of salinity values.

- Close the Partial Response of Continuous Variables chart.

- In the Standalone Tables section, under the sensitivity1 table, in the Charts section, double-click the Omission Rates chart and double-click the ROC Plot chart.

- Click the tabs for the chart panes and drag them to arrange the charts so you can see them together.

These two charts give additional context to the omission rate and AUC diagnostics you looked at earlier.

- On the Omission Rates chart, click and drag a box to select points near the 0.5 cutoff value.

The 0.5 cutoff value is the default value that you used in the model.

You can investigate how changing the presence probability cutoff would impact the classification of background points by clicking and dragging to select points on the Omission Rates chart.

Lowering the cutoff value increases the proportion of background points classified as potential presence.
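You can reproduce the trade-off explored on the Omission Rates chart with a simple sweep. This Python sketch assumes the predicted probabilities for presence and background points are plain lists. For each candidate cutoff, it reports the omission rate and the share of background points classified as potential presence.

```python
def sweep_cutoffs(presence_probs, background_probs, cutoffs):
    """Return (cutoff, omission rate, share of background classified as presence) per cutoff."""
    rows = []
    for c in cutoffs:
        omission = sum(p <= c for p in presence_probs) / len(presence_probs)
        bg_presence = sum(b > c for b in background_probs) / len(background_probs)
        rows.append((c, omission, bg_presence))
    return rows
```

Lowering the cutoff can only decrease the omission rate and increase the share of background points classified as presence. That is the tension you balance when choosing a cutoff.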

- Close the charts.

You've reviewed the model results and examined some of the contextual diagnostic data. Now you'll adjust the model to deal with the conceptual problem of having training points on land.

Rerun the model with a better study area

The first run of the model created the set of trained classification points within the convex hull extent of the points in the USA_Train feature layer. While the points are located in shallow water, much of the area between them is occupied by land. Now you'll rerun the model but restrict the placement of trained classification points to areas that are within shallow water.
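To see why a convex hull study area reaches over land, consider presence points strung along two coastlines that meet at a corner. The convex hull is the smallest convex polygon containing all of the points, so it spans the land between the coasts. The Python sketch below uses Andrew's monotone chain algorithm, a standard convex hull method. The coordinates in the example are hypothetical.

```python
def convex_hull(points):
    """Andrew's monotone chain: return hull vertices in counterclockwise order."""
    pts = sorted(set(points))
    if len(pts) <= 2:
        return pts

    def cross(o, a, b):
        # > 0 for a counterclockwise turn o -> a -> b, < 0 for clockwise, 0 if collinear
        return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])

    lower, upper = [], []
    for p in pts:
        while len(lower) >= 2 and cross(lower[-2], lower[-1], p) <= 0:
            lower.pop()
        lower.append(p)
    for p in reversed(pts):
        while len(upper) >= 2 and cross(upper[-2], upper[-1], p) <= 0:
            upper.pop()
        upper.append(p)
    # The last point of each chain is the first point of the other, so drop it.
    return lower[:-1] + upper[:-1]
```

For example, take points along the x and y axes, `[(0, 0), (5, 0), (10, 0), (0, 5), (0, 10)]`. Their hull is the triangle `[(0, 0), (10, 0), (0, 10)]`. That triangle contains interior points such as `(3, 3)` that lie on neither coastline, just as the first model's study area contained land.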

- Click the Analysis tab, and in the Geoprocessing section, click History.

- In the History pane, double-click the top result, Presence-only Prediction (MaxEnt).

Opening the tool this way opens it with all of the previous parameters still filled in.

Note:

It may take some time to repopulate all of the parameter values in the Geoprocessing pane.

You will only change a few of the tool parameters.

- On the Presence-only Prediction (MaxEnt) tool, scroll down to the Study Area parameter, click the drop-down list and click Polygon study area.

After tool validation completes, a new parameter will appear.

- For Study Area Polygon, click the drop-down list and click USA shallow waters.

This will restrict the area of possible seagrass habitat presence and absence test locations to the shallow water coastal areas around the continental United States.

- For Output Trained Model File, type seagrass_model.

After a few moments, the path within your project folder structure is populated and the .ssm file extension is added to the model name.

You will work with this model file in the next section of the tutorial.

Note:

Typically, you would evaluate the new model to ensure that the results are appropriate before saving the model file, but you will output it now to save time.

- Expand the Training Outputs section and update the output names with a 2 to indicate that this is the second run.

- Output Trained Features: trainfeatures2

- Output Response Curve Table: rc2

- Output Sensitivity Table: sensitivity2

- Expand the Prediction Options section and update the Output Prediction Raster name with a 2 to indicate that this is the second run.

- Output Prediction Raster: seagrass_predict2

- Click Run.

The tool will take some time (about two minutes) to run.

When the tool completes, layers will be added to the Contents pane.

- In the Contents pane, uncheck the boxes to turn off all the layers except the seagrass_predict2 layer and the basemap.

- In the Contents pane, check the box to turn on the trainfeatures2 layer.

- Right-click the trainfeatures2 layer and click Zoom To Layer.

The training features (presence and background locations) are now located appropriately in coastal waters rather than on land.

- On the Presence-only Prediction (MaxEnt) tool, click View Details.

- Click Messages, then scroll down to the Model Summary table.

Check the Omission Rate and AUC values. Note that the AUC is similar to the previous model but the omission rate is much lower, indicating better model performance.

The Cross-Validation Summary table shows that the % Presence - Correctly Classified ranged from 95 percent to 96 percent.

You could also explore the Sensitivity and Response Curve charts for this new model and compare them to the previous model.

- Close the Details window.

Compare the predictions

Next, you will visually compare the predictions of the two models.

- On the ribbon, click the Map tab, and in the Navigate section, click Bookmarks and click Europe.

- In the Contents pane, turn off the visibility of all layers except seagrass_predict2, seagrass_predict1, and Light Gray Base.

- In the Contents pane, click the seagrass_predict2 layer.

The Raster Layer contextual tab appears on the ribbon. This tab is available when a raster layer is selected in the Contents pane.

- On the ribbon, click the Raster Layer tab and in the Compare group, click the Swipe tool.

- In the map pane, click and drag the Swipe tool down across the map.

The Swipe tool interactively hides the selected layer and reveals the layer beneath it. You can use this tool to explore differences between your first and second predictions.

Note the differences around the Baltic Sea. With the initial model, the predicted probability of seagrass habitat presence was very low in the Baltic Sea, for example around Copenhagen, Denmark. The predicted probability increased in this region in the second model. Seagrass meadows are important carbon hot spots in the Baltic Sea, particularly in certain protected bays around Denmark, so this increases confidence in the newer model's performance.

You would normally continue to explore the model predictions and compare them to other known seagrass locations outside of U.S. coastal waters, but for the purposes of this tutorial, you are ready to move on to sharing the model.

You predicted seagrass habitat distributions in coastal areas across the globe using MaxEnt, working with the Presence-only Prediction tool iteratively to adjust parameters and ensure that your model was specified appropriately. Next, you'll document the model and share it.

Share your model

Now that the prediction is complete and the results have been evaluated, the next step is to make the modeling itself more transparent and reproducible. The second time you ran the Presence-only Prediction tool, you generated a spatial statistics model (.ssm) file.

You will add variable descriptions and units to this file so the model is fully documented and ready to be shared. Whether or not you plan to share the model file, keeping a documented model file in your records allows you to revisit previous analyses, understand the expected input variables and their units, and review how the model performed. You may also want to share the model with others, such as colleagues who want to replicate your analysis in their region or build on your work by analyzing a local area with higher-resolution data.

Document the model file

To document the model file, complete the following steps:

- In the Geoprocessing pane, click the back arrow, and in the search box, type describe spatial, then click Describe Spatial Statistics Model File in the results.

- For Input Model File, click the browse button and expand the Project, Folders, SeagrassPrediction, and p30 folders.

- Click the seagrass_model.ssm file and click OK.

An information message appears, providing basic information about the model, including the model type and variable to predict.

- Click Run.

- When the tool completes, click View Details.

- Expand the Details window and review its contents.

Many details about the model are provided, including the date the model was created, the type of model, predictors and response, and model characteristics and diagnostics, including the AUC and Omission Rate.

Importantly, input locations and values are not disclosed in a model file, so you can share a model even if the input data is sensitive, such as nesting locations for an endangered bird species.

There is no information for the Description and Unit fields for the variable to predict and explanatory training rasters. Without understanding what each variable represents and its units, another user will not be able to make use of this model file. Imagine if a user assumed that temperature was measured in Fahrenheit for this model when it is actually in Celsius—their predictions would be incorrect.

Next, you will fill in this missing information.

- Close the Details window.

- In the Geoprocessing pane, click the back arrow and in the search box, type set spatial statistics, then click Set Spatial Statistics Model File Properties.

- For Input Model File, click the browse button and click the seagrass_model.ssm file, then click OK.

The variable and raster names used in the model are listed. The Description and Unit boxes allow you to add information to the model documentation.

- In the Variable To Predict section, under Presence-Only, for Description, type Seagrass habitat presence.

- In the Variable To Predict section, under Presence-Only, for Unit, type None.

- In the Explanatory Training Rasters section, fill in each variable Description and Unit as follows:

- For: EBK_DISSO2, Description: Dissolved oxygen, Unit: ml/l

- For: EBK_NITRATE, Description: Nitrates, Unit: μmol/l

- For: EBK_PHOSPHATE, Description: Phosphates, Unit: μmol/l

- For: EBK_SALINITY, Description: Salinity, Unit: None

- For: EBK_SILICATE, Description: Silicates, Unit: μmol/l

- For: EBK_SRTM30, Description: Depth, Unit: Meters

- For: EBK_TEMP, Description: Temperature, Unit: °C

- Scroll back to the top of the tool and click in the Input Model File box.

This should trigger validation of the variables you entered. Sometimes these values are lost if tool validation is not triggered before running the tool.

- Click Run.

- Click View Details.

The tool reports that the fields have been updated.

- Close the Details window.

- From the geoprocessing History, double-click the Describe Spatial Statistics Model File tool.

- Click Run, and then click View Details.

The details are updated.

You have confirmed that the variable descriptions and units are now correctly documented and the model file is ready to be shared by email, on a shared drive, or online. You can keep this model file to run a different prediction in the future or share it with others who may want to run additional predictions. For example, this prediction used Ecological Marine Units (EMU) decadal average (50-year mean) data, but another researcher may want to predict using projected ocean measurements to understand how seagrass distributions might change under warming ocean conditions.

- Close the Details window.

In this tutorial, you prepared training data and created a machine learning model to predict seagrass habitats in coastal regions around the world. You also made your analysis reproducible and extendable by documenting the model file to be shared with others who want to replicate or build on your work. Promoting open science is an important part of conservation efforts, including for seagrasses and the ecosystems they support. This tutorial used a simplified approach to seagrass modeling, and in some cases, tool parameter settings were optimized for processing speed. The following resources provide more information about real-world efforts to model seagrass habitat:

- Aydin, Orhun, Carlos Osorio-Murillo, Kevin A. Butler, and Dawn Wright. 2022. "Conservation Planning Implications of Modeling Seagrass Habitats with Sparse Absence Data: A Balanced Random Forest Approach." Journal of Coastal Conservation 26 (3): 22. https://doi.org/10.1007/s11852-022-00868-1.

- Bertelli, Chiara M., Holly J. Stokes, James C. Bull, and Richard K. F. Unsworth. 2022. "The Use of Habitat Suitability Modelling for Seagrass: A Review." Frontiers in Marine Science 9: 997831. https://doi.org/10.3389/fmars.2022.997831.

- McKenzie, Len J., Lina M. Nordlund, Benjamin L. Jones, Leanne C. Cullen-Unsworth, Chris Roelfsema, and Richard K. F. Unsworth. 2020. "The Global Distribution of Seagrass Meadows." Environmental Research Letters 15 (7): 074041. https://doi.org/10.1088/1748-9326/ab7d06.

- Wang, Ming, Yong Wang, Guangliang Liu, Yuhu Chen, and Naijing Yu. 2022. "Potential Distribution of Seagrass Meadows Based on the MaxEnt Model in Chinese Coastal Waters." Journal of Ocean University of China 21 (5): 1351–61. https://doi.org/10.1007/s11802-022-5006-2.