Set up the project and the imagery

To get started, you'll set up the project and the imagery. You'll download and explore the data, create an ArcGIS Pro project, set up the environment, and create a Reality mapping workspace.

Note:

To work with Reality for ArcGIS Pro, the following software must be installed and licensed in the following order:

- ArcGIS Pro Standard or Advanced, version 3.1 or later

- ArcGIS Reality Studio

- ArcGIS Reality for ArcGIS Pro extension

- ArcGIS Coordinate System Data

This tutorial assumes that these steps were already completed. For step-by-step instructions, see the Install ArcGIS Reality for ArcGIS Pro page.

Download and explore the data

First, you'll download the data you need for this tutorial and review it.

- Download the Orlando_Data.zip file and locate the downloaded file on your computer.

Note:

This file is close to 4 GB, so it might take some time to download.

Most web browsers download files to your computer's Downloads folder by default.

- Right-click the Orlando_Data.zip file and unzip it to the C:\Sample_Data location.

Caution:

The path for the data should be exactly C:\Sample_Data\Orlando_Data. If you save the data to a different location on your computer, you need to update the path in each of the entries in the Orlando_Frames_Table.csv file.
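If you do extract the data elsewhere, editing every path by hand is tedious. The following Python sketch rewrites the path prefix in the first column of the frames table. It assumes the image path is in column A, as described later in this section, and that the file has a single header row. The function name `update_frame_paths` and the example destination are hypothetical. Back up the file before running it.

```python
import csv
from pathlib import Path

# Root the frames table expects, per the caution above.
OLD_ROOT = r"C:\Sample_Data\Orlando_Data"


def update_frame_paths(csv_path, new_root, old_root=OLD_ROOT):
    """Rewrite the image path prefix in column A of a frames table, in place.

    Assumes one header row and the image path in the first column
    (hypothetical helper, not an ArcGIS tool). Returns the number of rows changed.
    """
    csv_path = Path(csv_path)
    with csv_path.open(newline="") as f:
        rows = list(csv.reader(f))
    changed = 0
    for row in rows[1:]:  # skip the header row
        # Windows paths are case-insensitive, so compare in lowercase.
        if row and row[0].lower().startswith(old_root.lower()):
            row[0] = new_root + row[0][len(old_root):]
            changed += 1
    with csv_path.open("w", newline="") as f:
        csv.writer(f).writerows(rows)
    return changed
```

For example, `update_frame_paths(r"D:\Data\Orlando_Data\Frames\Orlando_Frames_Table.csv", r"D:\Data\Orlando_Data")` would point every image entry at the new location.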

- Open the extracted Orlando_Data folder and examine its contents.

It contains five subfolders: AOI, DEM, Frames, Images, and Output.

- Open the Images folder.

This folder contains all the aerial imagery to be processed in the form of 136 TIFF files.

- Scroll down through the folder and identify an image that contains the word Cam1 in its name, such as 0000123-000275-091314134101-Cam1.tif. Double-click it to open it in your default image viewing application.

All of the Cam1 images are nadir, meaning they were collected with the optical axis of the camera perpendicular to the ground (pointing straight down). They provide good coverage of the tops of features in the landscape, such as building roofs.

Note:

This imagery was provided by Lead'Air Inc. It is high-quality digital aerial imagery captured with MIDAS sensors. Learn more about Lead'Air Inc.

- Close the window displaying the image.

- Double-click one of the images whose names contain the words Cam2, Cam3, Cam4, or Cam5, such as 0000168-000245-091314134426-Cam2.tif.

These images are oblique, meaning they were captured with the optical axis of the camera inclined (at an angle). They provide good coverage for the sides of the features, such as the sides of the buildings. 3D product generation requires overlapping nadir and oblique imagery. It is important to use high-quality imagery to obtain good results.

Note:

Cam1, Cam2, Cam3, Cam4, and Cam5 are short for Camera 1, Camera 2, Camera 3, and so on.

- Optionally, open and review more images from the various cameras.

- Close all the windows displaying images.

- Browse to Orlando_Data and open the Frames folder.

The .csv files in this folder contain information about the position of the images in space and the cameras used to capture them.

- Double-click the Orlando_Frames_Table.csv file to open it in your default CSV viewing software.

The file contains information about the images to be processed in tabular format. Each row describes one image: the path to the image on disk (column A), the object ID (column B), the spatial reference of the image center coordinates (column C), and the image center coordinates themselves (columns D, E, and F). Columns G, H, and I contain the rotation angles, and column K identifies the camera that captured the image.
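To make the column layout concrete, the sketch below reads frames table rows and picks out the fields by the column letters listed above. It assumes a single header row. The dictionary keys (`path`, `object_id`, `rot1`, and so on) are hypothetical labels, not the table's actual column headers.

```python
import csv
import io


def col_index(letter):
    """Convert a single spreadsheet column letter (A to Z) to a 0-based index."""
    return ord(letter.upper()) - ord("A")


# Column positions as described in the tutorial; key names are illustrative.
LAYOUT = {
    "path": "A", "object_id": "B", "srs": "C",
    "x": "D", "y": "E", "z": "F",
    "rot1": "G", "rot2": "H", "rot3": "I",
    "camera": "K",
}


def read_frames(text):
    """Yield one dict per image row of a frames table supplied as CSV text."""
    reader = csv.reader(io.StringIO(text))
    next(reader)  # skip the header row
    for row in reader:
        rec = {name: row[col_index(c)] for name, c in LAYOUT.items()}
        # Coordinates and rotation angles are numeric.
        for key in ("x", "y", "z", "rot1", "rot2", "rot3"):
            rec[key] = float(rec[key])
        yield rec
```

Usage: `frames = list(read_frames(open("Orlando_Frames_Table.csv").read()))`, then group the records by `rec["camera"]` to count images per camera.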

- Close the Orlando_Frames_Table.csv file. Open Orlando_Cameras_Table.csv.

This table contains information specific to the five cameras used to capture the imagery:

- Camera ID – the name or model of the camera used to capture the images.

- Focal Length – the distance between the lens of the camera and the focal plane (in microns).

- Principal X and Principal Y – the x and y coordinates for the principal point of autocollimation (in microns).

- Pixel Size – the camera pixel size (in microns).

- Konrady – the camera distortion parameters.
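The focal length, principal point coordinates, and pixel size are all given in microns. Dividing any of them by the pixel size therefore expresses it in pixels, a form commonly used in photogrammetric calculations. The function name and the camera values below are hypothetical and are not the Orlando camera parameters.

```python
def microns_to_pixels(value_um, pixel_size_um):
    """Convert a length on the sensor from microns to pixels."""
    if pixel_size_um <= 0:
        raise ValueError("pixel size must be positive")
    return value_um / pixel_size_um


# Hypothetical camera: 100 mm lens (100,000 microns) with 4.6-micron pixels.
focal_px = microns_to_pixels(100_000, 4.6)  # roughly 21,739 pixels
```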

- Close the Orlando_Cameras_Table.csv file.

- Browse back to Orlando_Data.

The remaining folders contain the following information:

- In the AOI folder, a feature class provides the boundaries for the area of interest for the workflow.

- In the DEM folder, a digital elevation model raster provides elevation information for the area where the imagery was captured. That information will be used to determine the flying height for each image.

- The Output folder contains ready-made versions of the products you'll generate in this tutorial. You can optionally use them later in the workflow instead of running the processing yourself.
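Here is how the DEM feeds into flying height. The camera's height above the terrain is the image center elevation from the frames table minus the ground elevation from the DEM. For a nadir image, that height in turn sets the ground sample distance (GSD) through the pinhole camera relation GSD = pixel size × height / focal length. The sketch below assumes the camera elevation and the DEM share the same vertical units and datum. The function names and sample values are hypothetical.

```python
def height_above_ground(camera_z, ground_elevation):
    """Camera height above the terrain; inputs must share vertical units and datum."""
    return camera_z - ground_elevation


def nadir_gsd(pixel_size_um, focal_length_um, height):
    """Ground sample distance for a vertical (nadir) image.

    Pixel size and focal length only need to share a unit (microns here),
    so their ratio is unitless and the GSD comes out in the unit of height.
    """
    return pixel_size_um / focal_length_um * height


# Hypothetical values: camera at 1,030 m over terrain at 30 m,
# 5-micron pixels and a 100 mm (100,000-micron) lens.
h = height_above_ground(1030.0, 30.0)
gsd = nadir_gsd(5.0, 100_000.0, h)  # about 0.05 m per pixel
```

Oblique images have a GSD that varies across the frame, so this simple relation applies only to nadir images.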

Create a project and connect to the data

Now that you've downloaded the data and explored it, you'll create an ArcGIS Pro project and connect it to the data.

- Start ArcGIS Pro. If prompted, sign in using your licensed ArcGIS organizational account.

Note:

If you don't have access to ArcGIS Pro or an ArcGIS organizational account, see options for software access.

- On the ArcGIS Pro start screen, under New Project, click Map.

- In the Create a New Project window, for Name, type Orlando_3D_products.

- For Location, accept the default or click the Browse button to choose another location on your drive.

Note:

Ensure that the location you choose has at least 20 gigabytes (GB) of available storage space.

- Click OK.

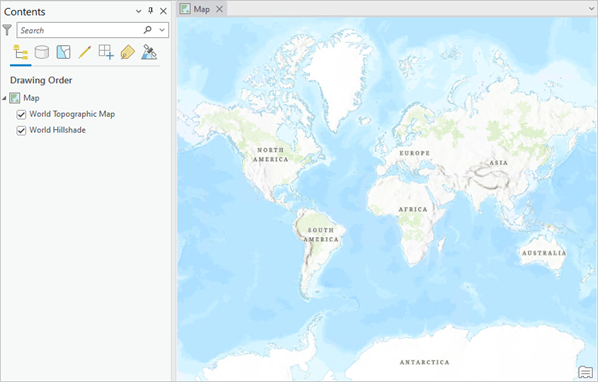

The project opens and displays a map view.

Next, you'll connect the project to the data you downloaded.

- On the ribbon, click the View tab. In the Windows group, click Catalog Pane.

The Catalog pane appears. This pane contains all the folders, files, and data associated with the project. You'll use this pane to establish a folder connection to the Orlando_Data folder.

- In the Catalog pane, click the arrow next to Folders to expand it and view its content.

The default folder associated with the project is Orlando_3D_products, which was created along with the project. For now, the folder contains some empty geodatabases and toolboxes, but no data.

- Right-click Folders and choose Add Folder Connection.

- In the Add Folder Connection window, click Computer and browse to C:\Sample_Data. Select the Orlando_Data folder and click OK.

In the Catalog pane, under Folders, the Orlando_Data folder is now listed.

- Expand the Orlando_Data folder and confirm that it contains the imagery and other data you saw earlier.

You can now access the aerial imagery and other data needed for the workflow inside your ArcGIS Pro project.

Set up the environment

Next, you'll set imagery-related geoprocessing environment values. ArcGIS Pro applies these settings when it runs imagery tools.

- On the ribbon, on the Analysis tab, in the Geoprocessing group, click Environments.

- Under Parallel Processing, for Parallel Processing Factor, type 90%.

The parallel processing factor defines the percentage of your computer's cores used for processing. For example, on a 4-core machine, a factor of 50% spreads the operation over 2 processes (50% × 4 = 2). In this workflow, you'll use 90%.
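The arithmetic in the example above can be written as a small function. This paragraph does not say how ArcGIS Pro rounds a fractional result (for example, 90% of 6 cores is 5.4). The sketch below rounds down and keeps at least one process, so treat that rounding rule as an assumption.

```python
import math


def processes_for_factor(factor, cores):
    """Number of processes for a percentage factor such as "90%".

    Rounding down with a minimum of 1 is an assumption for illustration.
    """
    text = factor.strip()
    if not text.endswith("%"):
        raise ValueError("expected a percentage such as '90%'")
    percent = float(text[:-1])
    # Multiply before dividing to avoid float error (e.g. 90 * 10 / 100 == 9.0).
    return max(1, math.floor(percent * cores / 100))
```

With this rule, `processes_for_factor("50%", 4)` gives 2, matching the example above, and `processes_for_factor("90%", 8)` gives 7.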

Tip:

Make sure to include the % sign in 90%.

- Scroll down to Raster Storage.

- Under Raster Statistics, for X skip factor and Y skip factor, type 10.

Statistics must be computed on imagery to enable certain tasks such as applying a contrast stretch. For efficiency, statistics can be generated for a sample of pixels instead of every pixel. The skip factor determines the sample size. 10 in X and 10 in Y means that every eleventh pixel in the image row and column will be used to generate statistics.

- Under Tile Size, for Width and Height, type 512.

For efficiency, imagery is often accessed in the form of small square fragments named tiles. This parameter defines the tile size, which you choose to be 512 by 512 pixels.
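For a sense of scale, you can count the 512 × 512 tiles needed to cover one image. Divide the image width and height by 512, round each result up, and multiply them. The image dimensions in the usage line are hypothetical, not those of the Orlando images.

```python
import math


def tile_count(width_px, height_px, tile=512):
    """Number of square tiles needed to cover an image; edge tiles may be partial."""
    return math.ceil(width_px / tile) * math.ceil(height_px / tile)
```

For example, `tile_count(10000, 7000)` returns 280 (20 tiles across by 14 down).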

- For Resampling Method, choose Bilinear.

Resampling is the process of computing new cell values when a raster's cell size or orientation changes. Of the available resampling methods, Bilinear is recommended for imagery, which is continuous data.
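Bilinear resampling estimates a value at a non-integer location from the four nearest cell centers, weighting each by its proximity. The sketch below applies that textbook formula to a plain 2D list. It is not ArcGIS code, and it handles edges only by clamping coordinates to the grid.

```python
def bilinear(grid, x, y):
    """Interpolate grid (a list of rows) at column x, row y (floats).

    Coordinates are clamped so the four neighbors stay inside the grid.
    """
    rows, cols = len(grid), len(grid[0])
    x = min(max(x, 0.0), cols - 1.0)
    y = min(max(y, 0.0), rows - 1.0)
    x0, y0 = int(x), int(y)
    x1, y1 = min(x0 + 1, cols - 1), min(y0 + 1, rows - 1)
    dx, dy = x - x0, y - y0
    # Interpolate along x on the upper and lower rows, then along y.
    top = grid[y0][x0] * (1 - dx) + grid[y0][x1] * dx
    bottom = grid[y1][x0] * (1 - dx) + grid[y1][x1] * dx
    return top * (1 - dy) + bottom * dy
```

For the grid `[[0, 10], [20, 30]]`, the value at the center `(0.5, 0.5)` is 15, the average of the four cells.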

- Accept all other defaults and click OK.

Create a workspace

Next, you'll create a Reality mapping workspace to gather and manage all your data, including the aerial images and the frames and cameras tables.

- On the ribbon, on the Imagery tab, in the Reality Mapping group, click the New Workspace button.

The New Reality Mapping Workspace wizard pane appears, showing the Workspace Configuration page.

- Set the following parameter values:

- For Name, type Orlando_Workspace.

- For Workspace Type, confirm that Reality Mapping is selected.

- For Sensor Data Type, choose Aerial – Digital.

- For Scenario Type, choose Oblique.

- Accept all other defaults.

Note:

Because your workflow uses both nadir and oblique imagery, choose Oblique for Scenario Type.

- Click Next.

The Image Collection page appears. On this page, you'll enter parameters related to the sensor used to capture the images.

- In the Image Collection pane, for Sensor Type, confirm that Generic Frame Camera is selected.

Next, you'll provide the frames table file.

- Under Source Data 1, for Exterior Orientation File / Esri Frames Table, click the Frames Table button.

- In the Frames Table window, browse to Folders > Orlando_Data > Frames. Select Orlando_Frames_Table.csv and click OK.

The Image Collection pane updates with some of the information provided by the frames table file, such as the spatial referencing information associated with the image center coordinates and the list of five cameras. You now need to import the information about the cameras provided in the cameras table file.

- Next to Cameras, click the Import button.

- In the Cameras Table window, browse to Folders > Orlando_Data > Frames. Select Orlando_Cameras_Table.csv and click OK.

A green check mark next to each camera ID indicates that the camera information was successfully imported.

- Accept all other defaults and click Next.

Next, you'll point to the DEM you'll use in the workflow.

- In the Data Loader Options pane, under DEM, click the Select DEM button.

- In the Input Dataset window, browse to Folders > Orlando_Data > DEM. Select North_Downtown_DEM.tif and click OK.

- In the wizard pane, accept the other defaults and click Finish.

After a few minutes, the workspace is created. In the Logs: Orlando_Workspace window, the last line indicates that the process succeeded.

A new Orlando_Workspace 2D map is also created.

Various workspace components are now listed in the Contents pane. This includes Image Collection, a new mosaic dataset that contains all 136 aerial images.

The Image Collection dataset is represented primarily with a Footprint layer (green outlines) and an Image layer containing the images themselves. The two layers are displayed on the map.

Tip:

If you can't see the images on the map, zoom in further.

By default, only the first 20 images from the dataset are displayed. This number can be changed, but choosing a large number could impact the display's performance.

If you want to change that default number, in the Catalog pane, browse to Folders > Orlando_3D_products > RealityMapping > Orlando_Workspace.ermw > Imagery > Orlando_Workspace.gdb. Right-click Orlando_Workspace_Collection and choose Properties. Click the Defaults tab. For Maximum Number of Rasters Per Mosaic, type the number of your choice.

A Reality Mapping tab has also been added to the ribbon.

- On the ribbon, click the Reality Mapping tab.

The tab contains a series of tools that support imagery alignment and the production of 2D and 3D products. Currently, the tools in the Product group are unavailable because your input images have not yet been adjusted.

- On the Quick Access Toolbar, click the Save Project button to save your project.

In the first part of this workflow, you downloaded the input data, set up an ArcGIS Pro project, created a Reality mapping workspace, and populated it with input data. In the second part of the workflow, you'll perform the image alignment and generate 3D products.

Process the imagery

Now that your project, workspace, and imagery are set up, you'll start the imagery processing. First, you'll improve the image alignment using tie points. Then, you'll generate a dense point cloud and a high-fidelity 3D mesh.

Improve image alignment with tie points

To improve the relative accuracy of your input images, you'll use tie points, which are common objects or locations identified in the overlap areas between adjacent images. The Adjust tool automatically extracts tie points using image matching techniques and uses them to better align the images relative to one another.

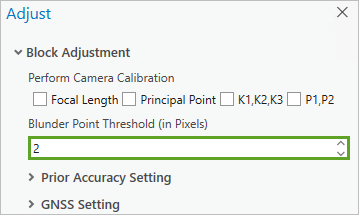

- On the ribbon, on the Reality Mapping tab, in the Adjust group, click the Adjust button.

You'll set some of the Adjust tool parameters that determine the quality and precision of the tie points and image alignment process.

- In the Adjust window, for Blunder Point Threshold (in Pixels), type 2.

This means any tie point with a residual error (or discrepancy) of two pixels or more will be excluded from the alignment process.
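Conceptually, the threshold acts as a filter on tie point residuals. The sketch below applies the rule as worded in this step, so a residual of two pixels or more is excluded. Whether the Adjust tool treats a residual exactly equal to the threshold this way is an assumption, and the residual values are invented.

```python
def remove_blunders(residuals_px, threshold_px=2.0):
    """Split tie point residual magnitudes (pixels) into kept and rejected lists."""
    kept = [r for r in residuals_px if r < threshold_px]
    rejected = [r for r in residuals_px if r >= threshold_px]
    return kept, rejected
```

For example, `remove_blunders([0.4, 1.1, 2.0, 3.7])` keeps `[0.4, 1.1]` and rejects `[2.0, 3.7]`.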

- Click the arrow next to Tie Point Matching to expand it.

- Uncheck Full Frame Pairwise Matching.

- For Tie Point Similarity, choose High.

- Check Full Frame Pairwise Matching.

- Accept all other default values and click Run.

The process might take several minutes to run. You can follow its progress in the Logs window, which first reports Computing the tie points, then Computing block adjustment, and finally Applying block adjustment. In a block adjustment, the overlapping images are treated as a group (a block), and their positions and orientations are adjusted together so that the tie points agree across images.

- When the process has completed, in the Logs window, identify the MeanReprojectionError(pixel) line.

This line gives an indication of the accuracy of the adjustment. A mean reprojection error of less than one pixel is acceptable.
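Reprojection error is commonly defined as the distance, in pixels, between where a tie point was measured in an image and where the adjusted model projects it back into that image. The mean is the average over all such observations. The sketch below implements that general definition. The exact statistic the Adjust tool reports may be computed differently (for example, as a root mean square), and the coordinates are made up.

```python
import math


def mean_reprojection_error(observed, reprojected):
    """Average Euclidean distance (pixels) between paired (x, y) image points."""
    if len(observed) != len(reprojected) or not observed:
        raise ValueError("need two non-empty lists of equal length")
    total = sum(math.dist(o, p) for o, p in zip(observed, reprojected))
    return total / len(observed)
```

With `observed = [(0, 0), (10, 10)]` and `reprojected = [(0.3, 0.4), (10, 10)]`, the distances are 0.5 and 0 pixels, so the mean is about 0.25 pixels, well under the one-pixel guideline.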

Note:

The accuracy number you obtain might be slightly different from the one in the example image.

- In the Contents pane, check the box next to the Tie Points layer to turn it on.

On the map, all of the tie points identified by the Adjust tool appear.

You have now optimized the relative accuracy of your images.

Note:

Optionally, you can also improve the absolute accuracy of the image positioning by using ground control points. This step is beyond the scope of this tutorial, but you can learn more on the Add ground control points to a Reality mapping workspace page.

Generate 3D products

Next, you'll generate the 3D products. To keep processing time short, you'll generate them only for a small area whose boundaries are provided by the Orlando_Small_AOI.shp layer. You'll add it to the map to examine it.

- In the Catalog pane, expand Folders, Orlando_Data, and AOI. Right-click Orlando_Small_AOI.shp and choose Add To Current Map.

On the map, the AOI polygon appears in a randomly assigned color (purple in the following example image).

The image coverage is significantly larger than the AOI polygon. This ensures that all images that have any overlap with the AOI are included. The overlapping images must all be used to generate high-quality results.
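The selection rule described here is to keep every image whose footprint overlaps the AOI. It can be sketched with axis-aligned bounding boxes. Real footprints and the AOI are polygons, so this bounding-box test is a simplification, and the image names and coordinates are hypothetical.

```python
from typing import Dict, List, NamedTuple


class Box(NamedTuple):
    xmin: float
    ymin: float
    xmax: float
    ymax: float


def overlaps(a: Box, b: Box) -> bool:
    """True if two boxes share any area or touch along an edge."""
    return a.xmin <= b.xmax and b.xmin <= a.xmax and a.ymin <= b.ymax and b.ymin <= a.ymax


def images_touching_aoi(footprints: Dict[str, Box], aoi: Box) -> List[str]:
    """Sorted names of images whose footprint box overlaps the AOI box."""
    return sorted(name for name, box in footprints.items() if overlaps(box, aoi))
```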

- In the Contents pane, turn off the Orlando_Small_AOI layer.

- On the ribbon, on the Reality Mapping tab, review the Product group.

Following the image adjustment process, some tools within that group are now available. Products can be generated individually using the individual product buttons (such as Point Cloud or 3D Mesh) or simultaneously using the Multiple Products button. You'll use the latter option.

Note:

Currently, the 2D product buttons (such as DSM, True Ortho, and DSM Mesh) are still unavailable because your workspace contains oblique imagery, which is not appropriate for 2D product generation.

- On the Reality Mapping tab, click the Multiple Products button.

The Reality Mapping Products Wizard appears, showing the Product Generation Settings page.

- Confirm that the Point Cloud and 3D Mesh products are selected.

- Click Shared Advanced Settings.

The Advanced Product Settings window appears. It allows you to set parameters that affect all products to be generated.

- In the Advanced Product Settings window, for Quality, confirm that Ultra is selected.

The Ultra setting generates products at the source image resolution, the highest available. A Quality setting of High, Medium, or Low generates products with a cell size two, four, or eight times that of the source imagery, that is, at progressively coarser resolution.
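Put differently, each step down in quality doubles the output cell size. The sketch below maps each setting to an output cell size, assuming Ultra corresponds to the source resolution. The 5 cm source cell size in the usage line is a made-up example.

```python
# Cell size multiplier relative to the source imagery for each Quality setting.
QUALITY_FACTOR = {"Ultra": 1, "High": 2, "Medium": 4, "Low": 8}


def output_cell_size(source_cell_size, quality):
    """Approximate product cell size for a Quality setting."""
    return source_cell_size * QUALITY_FACTOR[quality]
```

For 0.05 m source imagery, `output_cell_size(0.05, "Low")` gives 0.4 m.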

- For Scenario Type, confirm that Oblique is selected.

This Oblique scenario type was selected during the workspace creation phase. It was chosen because your dataset includes oblique images, which are needed to produce 3D products.

- For Product Boundary, click the Browse button.

- In the Product Boundary window, browse to Folders > Orlando_Data > AOI. Select Orlando_Small_AOI.shp and click OK.

The 3D products generated will be limited to the extent defined by the AOI feature class.

- Check Apply Global Color Balancing.

Tone can vary considerably from one image to the next. This option balances color across all images so that the transitions between them look smooth.
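This tutorial does not describe the balancing method ArcGIS Pro uses. To illustrate the general idea only, the sketch below uses a simple technique that is not necessarily ArcGIS Pro's. It shifts and scales each image's pixel values so that every image matches the mean and standard deviation of all pixels combined. For brevity, images are plain lists of single-band values.

```python
from statistics import fmean, pstdev


def balance_to_global(images):
    """Match each image's mean and standard deviation to the global ones."""
    all_px = [v for img in images for v in img]
    g_mean, g_std = fmean(all_px), pstdev(all_px)
    balanced = []
    for img in images:
        m, s = fmean(img), pstdev(img)
        # A flat image (s == 0) is only shifted, not scaled.
        scale = g_std / s if s > 0 else 1.0
        balanced.append([(v - m) * scale + g_mean for v in img])
    return balanced
```

After balancing, a dark image `[10, 20]` and a bright image `[110, 120]` both end up with a mean of 65.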

- Click OK.

- In the Reality Mapping Products Wizard pane, on the Product Generation Settings page, click Next.

- On the 3D Mesh Settings page, accept the default values.

Note:

Depending on your system resources, the 3D product generation process may take two and a half hours or more. For reference, it took two and a half hours on a computer with an i7 processor, 32 GB of RAM, and a solid-state drive (SSD).

If you prefer not to run this process to save time, you can use ready-made output datasets for the rest of the tutorial. In the Catalog pane, browse to Folders > Orlando_Data > Output > Point Cloud. Right-click Point Cloud and choose Add To Current Map.

If you choose to use the ready-made output dataset, proceed to the next section, Examine 3D products.

- If you decide to run the process, click Finish.

During the process, more status information is displayed in the Logs window. When the process is complete, the log indicates that the process succeeded.

Examine 3D products

You have now generated 3D products (or alternatively, you may have chosen to use the ready-made 3D products). Next, you'll examine them. First, you'll review the point cloud.

- In the Contents pane, under Data Products, turn on the Point Cloud layer and expand it to see its symbology.

Tip:

If you are using ready-made 3D products, the Point Cloud.lasd layer appears under Reference Data.

The point cloud footprint appears outlined in red.

Note:

This dataset is in the LAS format, commonly used to store point cloud data. Learn more about LAS datasets.

- In the Contents pane, turn off the Footprint and Image layers to better see the point cloud.

- Right-click the Point Cloud layer and choose Zoom To Layer.

- On the map, if necessary, zoom in until you see the points of the Point Cloud layer.

The lowest elevation points appear in blue, the medium elevation points in yellow, and the highest elevation points in red.
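The symbology maps elevation to color along a ramp. The actual ramp and its breakpoints are set in the layer's symbology and aren't given here. As an illustration only, the sketch below normalizes elevation to the range 0 to 1 and interpolates linearly from blue through yellow to red.

```python
def elevation_color(z, z_min, z_max):
    """Return an (R, G, B) tuple on a blue-yellow-red ramp for elevation z."""
    if z_max <= z_min:
        raise ValueError("z_max must exceed z_min")
    # Normalize and clamp elevation to the range 0..1.
    t = min(max((z - z_min) / (z_max - z_min), 0.0), 1.0)
    blue, yellow, red = (0, 0, 255), (255, 255, 0), (255, 0, 0)
    if t < 0.5:
        start, end, u = blue, yellow, t / 0.5
    else:
        start, end, u = yellow, red, (t - 0.5) / 0.5
    return tuple(round(s + (e - s) * u) for s, e in zip(start, end))
```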

- Optionally, zoom in further and pan to examine the layer.

In the 2D map, the visualization of the 3D point cloud is limited. Next, you'll visualize that dataset in a 3D scene.

- In the Catalog pane, expand Folders, Orlando_3D_products, Reality Mapping, Orlando_Workspace.ermw, Products, Reality, and Point_Cloud. Right-click Point_Cloud.lasd, point to Add To New, and choose Local Scene.

Tip:

If you are using the ready-made 3D products, go to Folders > Orlando_Data > Output > Point_Cloud.

The point cloud appears in a new scene, using the same symbology as before, but now displayed in 3D.

To better explore the point cloud layer, you'll tilt and rotate the scene with the Navigator.

- In the scene, locate the Navigator. Click the arrow to access the 3D navigation functionality.

The Navigator changes to a 3D sphere and an additional wheel appears for 3D navigation.

- In the expanded Navigator, use the middle wheel to tilt and rotate the scene. Use the mouse scrolling wheel to zoom in and out.

Tip:

Alternatively, you can also navigate the scene with the keyboard, pressing the following keys: V to tilt, B to rotate, C to pan, and Z to zoom, used in combination with the Up, Down, Left, and Right arrow keys.

- Explore the Point_Cloud.lasd layer, looking at it from various angles.

The layer delineates buildings, vegetation, and ground details.

- Zoom in and confirm that the layer is made of points at various levels of elevation.

- When you are done exploring, in the Contents pane, right-click the Point_Cloud.lasd layer and choose Zoom To Layer.

Next, you'll review the 3D mesh product.

- In the Catalog pane, under Reality, expand the Mesh and slpk folders. Right-click Mesh.slpk and choose Add To Current Map.

Tip:

If you are using the ready-made 3D products, go to Folders > Orlando_Data > Output > Mesh.

The mesh appears superimposed on the point cloud.

- In the Contents pane, turn off the Point_Cloud.lasd layer.

- Tilt and rotate the scene. Zoom in and out to observe the 3D mesh's photo-realistic details.

- On the Quick Access Toolbar, click the Save Project button to save your project.

To expand access to these 3D datasets, you can publish them as an online scene to your organization's ArcGIS Online account. You saw such an online scene example at the beginning of this tutorial. Learn more on the Publish hosted scene layers page. The 3D datasets can also be integrated into various projects and combined with other GIS or BIM layers.

In this tutorial, you generated a dense point cloud and a photo-realistic 3D mesh using high-resolution, overlapping oblique and nadir imagery covering a section of Orlando. You downloaded the input data, created an ArcGIS Pro project, and created a Reality mapping workspace where you loaded the imagery. You then improved the relative accuracy of the image alignment using automatically generated tie points. Finally, you used the Multiple Products wizard to generate 3D products and viewed them in a scene.

You can find more tutorials in the tutorial gallery.