Segment the imagery

Pervious surfaces allow water to pass through them; impervious surfaces do not. Pervious surfaces include vegetation, water bodies, and bare soil, while impervious surfaces are generally human-made, such as buildings, roads, and parking lots, and materials such as brick or asphalt. To determine which parts of the ground are pervious and which are impervious, you'll classify the imagery into land-use types. Multispectral imagery works well for this kind of classification because each land-use type tends to have unique spectral characteristics, also called a spectral signature.

However, if you try to classify an image in which almost every pixel has a unique combination of spectral characteristics, you are likely to encounter errors and inaccuracies. Instead, you'll group pixels into segments, which will generalize the image and significantly reduce the number of spectral signatures to classify. Once you segment the imagery, you'll perform a supervised classification of the segments. You'll first classify the image into broad land-use types, such as roofs or vegetation. Then, you'll reclassify those land-use types into either impervious or pervious surfaces.

Before you classify the imagery, you'll change the band combination to distinguish features clearly.

Download the data

To get started, you'll download data supplied by the local government of Louisville, Kentucky. This data includes imagery of the study area and land parcel features.

- Download the Surface_Imperviousness.zip file that contains your project and its data.

- Locate the downloaded file on your computer.

Note:

Depending on your web browser, you may have been prompted to choose the file's location before you began the download. Most browsers download to your computer's Downloads folder by default.

- Right-click the file and extract it to a location you can easily find, such as your Documents folder.

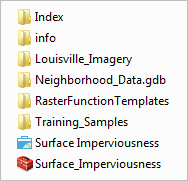

- Open the Surface_Imperviousness folder.

The folder contains several subfolders, an ArcGIS Pro project file (.aprx), and an ArcGIS toolbox (.tbx). Before you explore the other data, you'll open the project file.

- If you have ArcGIS Pro installed on your machine, double-click Surface Imperviousness (without the underscore) to open the project file. If prompted, sign in using your licensed ArcGIS account.

Note:

If you don't have access to ArcGIS Pro or an ArcGIS organizational account, see options for software access.

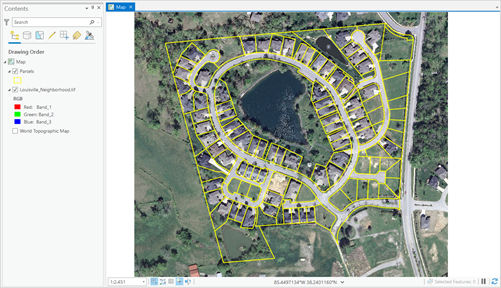

The project contains a map of a neighborhood near Louisville, Kentucky. The map includes Louisville_Neighborhood.tif, a 4-band aerial photograph of the area at 6-inch resolution, and the Parcels layer, a feature class of land parcels. The Louisville_Neighborhood.tif imagery comes from the United States National Agriculture Imagery Program (NAIP).

Next, you'll extract specific spectral bands from the imagery.

Extract spectral bands

The multiband imagery of the Louisville neighborhood currently uses the natural color band combination to display the imagery the way the human eye would see it. You'll change the band combination to better distinguish urban features such as concrete from natural features such as vegetation. While you can change the band combination by right-clicking the bands in the Contents pane, later parts of the workflow will require you to use imagery with only three bands. So you'll create an image by extracting the three bands that you want to show from the original image.

- In the Contents pane, click the Louisville_Neighborhood.tif layer to select it.

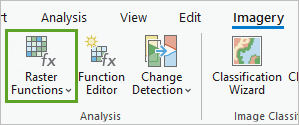

- On the ribbon, click the Imagery tab. In the Analysis group, click the Raster Functions button.

The Raster Functions pane appears.

Raster functions apply an operation to a raster image on the fly, meaning that the original data is unchanged and no new dataset is created. The output takes the form of a layer that exists only in the project in which the raster function was run. You'll use the Extract Bands function to create an image with only three bands to distinguish between impervious and pervious surfaces.

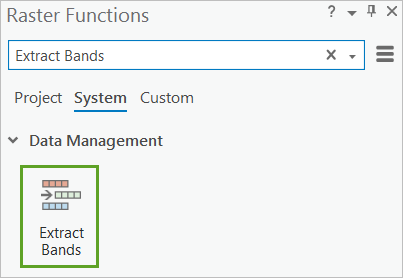

- In the Raster Functions pane, search for Extract Bands. Click the Extract Bands function.

The Extract Bands Properties function appears.

The bands you extract will include Near Infrared (Band 4), which emphasizes vegetation; Red (Band 1), which emphasizes human-made objects and vegetation; and Blue (Band 3), which emphasizes water bodies.

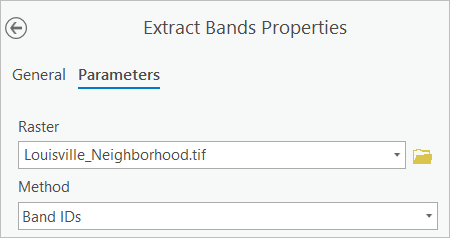

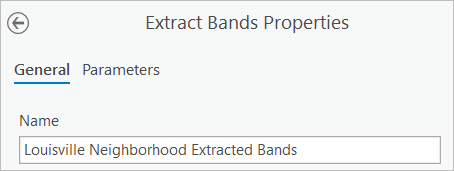

- In the Parameters tab, for Raster, choose the Louisville_Neighborhood.tif image. Confirm that Method is set to Band IDs.

The Method parameter determines the type of keyword used to refer to bands when you enter the band combination. For this data, Band IDs (a single number for each band) is the simplest way to refer to each band.

- For Combination, delete the existing text and type 4 1 3 (with spaces). Confirm that Missing Band Action is set to Best Match.

The Missing Band Action parameter specifies what action occurs if a band listed for extraction is unavailable in the image. Best Match chooses the best available band to use.
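To make the band combination concrete, here is a minimal Python sketch of what selecting bands by ID amounts to. It is an illustration only, not how the Extract Bands function is implemented. The function name `extract_bands` and the pixel values are hypothetical, and band 2 is assumed to be Green.

```python
def extract_bands(pixel, band_ids):
    """Return the values of the requested bands, in the requested order.

    `pixel` is a sequence of band values. Band IDs are 1-based, as in the
    Combination parameter, so ID 4 refers to the fourth band.
    """
    return tuple(pixel[band_id - 1] for band_id in band_ids)


# Hypothetical 4-band pixel over vegetation, ordered
# Red, Green (assumed), Blue, Near Infrared. Values are made up.
vegetation_pixel = (60, 90, 50, 200)

# The combination "4 1 3" places Near Infrared first, then Red, then Blue.
extracted = extract_bands(vegetation_pixel, [4, 1, 3])
print(extracted)  # (200, 60, 50)
```

Because the first extracted band is drawn in the red display channel, a pixel with a high Near Infrared value, such as vegetation, shows up as red on the map.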

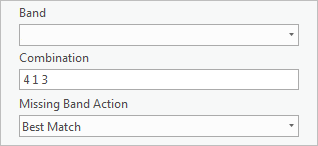

- Click the General tab. For Name, type Louisville Neighborhood Extracted Bands.

- Click Create new layer.

The new layer, named Louisville Neighborhood Extracted Bands_Louisville_Neighborhood.tif, is added to the map. It displays only the extracted bands. You'll rename the layer.

- In the Contents pane, right-click Louisville Neighborhood Extracted Bands_Louisville_Neighborhood.tif and choose Properties.

- In the Layer Properties window, for Name, type Louisville Neighborhood Extracted Bands. Click OK.

The yellow Parcels layer covers the imagery and can make some features difficult to see. You won't use the Parcels layer until later in the project, so you'll turn it off for now.

- In the Contents pane, uncheck the box next to the Parcels layer to turn it off.

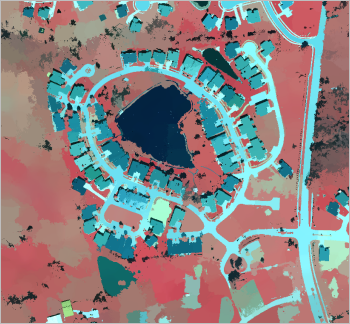

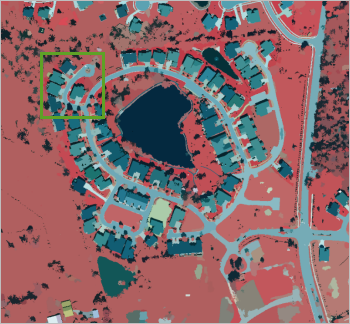

The Louisville Neighborhood Extracted Bands layer shows the imagery with the band combination that you chose (4 1 3). Vegetation appears as red, roads appear as gray, and roofs appear as shades of gray or blue. By emphasizing the difference between natural and human-made surfaces, you can more easily classify them later.

Caution:

Although the Louisville Neighborhood Extracted Bands layer appears in the Contents pane, it has not been added as data to any of your folders. If you remove the layer from the map, you'll delete the layer permanently.

Configure the Classification Wizard

Next, you'll open the Classification Wizard and configure its default parameters. The Classification Wizard walks you through the steps for image segmentation and classification.

- In the Contents pane, make sure that the Louisville Neighborhood Extracted Bands layer is selected.

- On the ribbon, on the Imagery tab, in the Image Classification group, click Classification Wizard.

Note:

If you want to open the individual tools available in the wizard, you can access them from the same tab. In the Image Classification group, click Classification Tools and choose the tool you want.

The Image Classification Wizard pane appears.

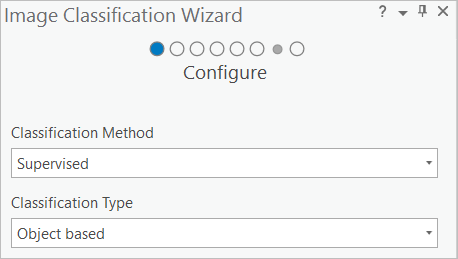

The wizard's first page (indicated by the blue circle at the top of the wizard) contains several basic parameters that determine the type of classification to perform. These parameters affect which subsequent steps will appear in the wizard. You'll use the supervised classification method. This method is based on user-defined training samples, which indicate what types of pixels or segments should be classified in what way. (An unsupervised classification, by contrast, relies on the software to decide classifications based on algorithms.)

- In the Image Classification Wizard pane, on the Configure page, confirm that Classification Method is set to Supervised and that Classification Type is set to Object based.

The object based classification type uses a process called segmentation to group neighboring pixels based on the similarity of their spectral characteristics.

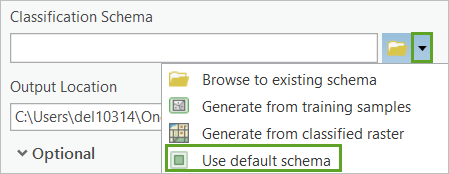

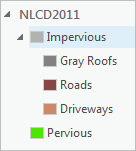

Next, you'll choose the classification schema. The classification schema is a file that specifies the classes that will be used in the classification. A schema is saved in an Esri classification schema (.ecs) file, which uses JSON syntax. For this workflow, you'll modify the default schema, NLCD2011. This schema is based on land cover types used by the United States Geological Survey.

- For Classification Schema, click the drop-down arrow and choose Use default schema.

The next parameter, Output Location, sets the workspace that stores all the outputs created in the wizard. These outputs include training data, segmented images, custom schemas, accuracy assessment information, intermediate outputs, and the final classification results.

- Confirm that Output Location is set to Neighborhood_Data.gdb.

Under Optional, you won't enter anything for Segmented Image, Training Samples, or Reference Dataset because you don't have any of these elements created ahead of time.

- Click Next.

The next page of the Image Classification Wizard focuses on segmentation.

Segment the image

You'll now choose the parameters for segmentation. The segmentation process groups adjacent pixels with similar spectral characteristics into segments. Doing so will generalize the image and make it easier to classify. Instead of classifying thousands of pixels with unique spectral signatures, you'll classify a much smaller number of segments. The optimal number of segments and the range of pixels grouped into a segment change depending on the image size and the intended use of the image. You'll set the segmentation parameters to best fit your imagery and classification goals.
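The idea of grouping adjacent, spectrally similar pixels can be sketched in a few lines of Python. This is a simple region-growing illustration on a single-band grid, not the segmentation method that the wizard runs. The function name `segment`, the `tolerance` parameter, and the pixel values are assumptions made for the example.

```python
from collections import deque


def segment(grid, tolerance):
    """Label 4-connected pixels into segments.

    A neighbor joins a segment when its value differs by at most
    `tolerance` from the pixel it was reached from.
    Returns a grid of integer segment IDs.
    """
    rows, cols = len(grid), len(grid[0])
    labels = [[None] * cols for _ in range(rows)]
    next_id = 0
    for r in range(rows):
        for c in range(cols):
            if labels[r][c] is not None:
                continue
            # Start a new segment and grow it breadth-first.
            labels[r][c] = next_id
            queue = deque([(r, c)])
            while queue:
                y, x = queue.popleft()
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if (0 <= ny < rows and 0 <= nx < cols
                            and labels[ny][nx] is None
                            and abs(grid[ny][nx] - grid[y][x]) <= tolerance):
                        labels[ny][nx] = next_id
                        queue.append((ny, nx))
            next_id += 1
    return labels


# Hypothetical single-band image with three visibly different regions.
image = [
    [10, 11, 50, 52],
    [12, 10, 51, 50],
    [11, 12, 90, 91],
]
for row in segment(image, tolerance=5):
    print(row)
# [0, 0, 1, 1]
# [0, 0, 1, 1]
# [0, 0, 2, 2]
```

Twelve distinct pixels collapse into three segments. Setting `tolerance=0` in this sketch leaves every pixel in its own segment, which mirrors the trade-off in the text: the stricter the similarity requirement, the more segments you get.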

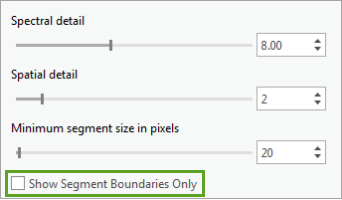

- On the Segmentation page, for Spectral detail, replace the default value with 8.

The Spectral detail parameter sets the level of importance given to spectral differences between pixels on a scale of 1 to 20. A higher value means that pixels must be more similar to be grouped together, creating a higher number of segments. A lower value creates fewer segments. Because you want to distinguish between pervious and impervious surfaces (which generally have very different spectral signatures), you chose a lower value.

- For Spatial detail, replace the default value with 2.

The Spatial detail parameter sets the level of importance given to the proximity between pixels on a scale of 1 to 20. A higher value means that pixels must be closer to each other to be grouped together, creating a higher number of segments. A lower value creates fewer segments that are more uniform throughout the image. You chose a low value because not all similar features in your imagery are clustered together. For example, houses and roads are not always close together and are located throughout the full image extent.

- For Minimum segment size in pixels, confirm that the value is 20.

Unlike the other parameters, the Minimum segment size in pixels parameter is not on a scale of 1 to 20 and can take any value. Segments with fewer pixels than the value specified in this parameter will be merged into a neighboring segment. You don't want segments that are too small, but you also don't want to merge pervious and impervious segments into one segment. The default value is acceptable in this case.

- Confirm that Show Segment Boundaries Only is unchecked.

The Show Segment Boundaries Only parameter determines whether the segments are displayed with black boundary lines. This is useful for distinguishing adjacent segments with similar colors but may make smaller segments more difficult to see. Some of the features in the image, such as the houses or driveways, are fairly small, so you leave this parameter unchecked.

- Click Next.

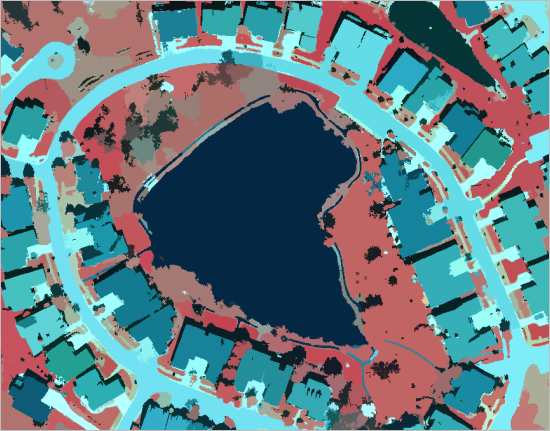

A preview of the segmentation is added to the map. It is also added to the Contents pane with the name Preview_Segmented.

At first sight, the output layer does not appear to have been segmented the way you wanted. Features such as vegetation seem to have been grouped into many segments that blur together, especially on the left side of the image. Tiny segments that seem to encompass only a handful of pixels dot the area as well. However, this image is being generated on the fly, which means the processing will change based on the map extent. At full extent, the image is generalized to save time. You'll zoom in to reduce the generalization, so you can better see what the segmentation looks like with the parameters you chose.

- With the mouse wheel, zoom to the neighborhood in the middle of the image.

The preview segmentation runs again. With a smaller map extent, the segmentation more accurately reflects the parameters you used, with fewer segments and smoother outputs.

Note:

If you are unhappy with how the segmentation turned out, you can return to the previous page of the wizard and adjust the parameters. The segmentation is only previewed on the fly because it can take a long time to process the actual segmentation, so it is good practice to test different combinations of parameters until you find a result you like.

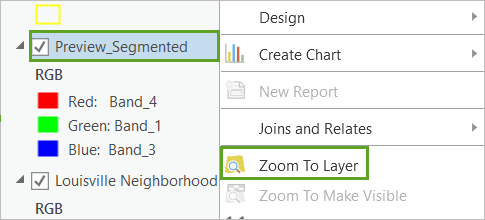

- In the Contents pane, right-click Preview_Segmented and choose Zoom To Layer to return to the full extent.

- On the Quick Access Toolbar, click the Save Project button to save the project.

Note:

A message may appear warning you that saving this project file with the current ArcGIS Pro version will prevent you from opening it again in an earlier version. If you see this message, click Yes to proceed.

The project is saved.

Caution:

Saving the project does not save your location in the wizard. If you close the project before you complete the entire wizard, you'll lose your spot and have to start the wizard over from the beginning.

You have extracted spectral bands to emphasize the distinction between pervious and impervious features. You also set up the segmentation parameters to group pixels with similar spectral characteristics into segments and simplify the image. Next, you'll classify the imagery by perviousness or imperviousness.

Classify the imagery

Next, you'll set up the classification of the image. A supervised classification is based on user-defined training samples, which indicate what types of pixels or segments should be classified in what way. (An unsupervised classification, by contrast, relies on the software to decide classifications based on algorithms.) You'll first classify the image into broad land-use types, such as vegetation or roads. Then, you'll reclassify those land-use types into either pervious or impervious surfaces.

Create training sample classes

To perform a supervised classification, you need training samples. Training samples are polygons that represent distinct sample areas of the different land-cover types in the imagery. The training samples inform the classification tool about the variety of spectral characteristics that each land cover can exhibit.
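To see how training samples can inform a classification, consider the simplified Python sketch below. It is not the classifier that the wizard runs. It swaps in a much simpler minimum-distance rule: each class is summarized by the mean of its training values, and a segment is assigned to the class with the nearest mean. All names and values are hypothetical.

```python
import math


def mean_signature(samples):
    """Average each band across a list of (band1, band2, band3) samples."""
    count = len(samples)
    return tuple(sum(band) / count for band in zip(*samples))


def classify(segment_value, signatures):
    """Return the class whose mean signature is closest to the segment."""
    return min(
        signatures,
        key=lambda name: math.dist(segment_value, signatures[name]),
    )


# Hypothetical training sample values (Near Infrared, Red, Blue).
training = {
    "Grass": [(200, 60, 50), (190, 70, 55), (210, 65, 45)],
    "Roads": [(90, 100, 110), (95, 105, 100), (85, 95, 105)],
}
signatures = {name: mean_signature(s) for name, s in training.items()}

print(classify((195, 62, 52), signatures))  # Grass
```

The more varied the samples you collect for a class, the better its summary reflects the range of spectral characteristics that the class can exhibit.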

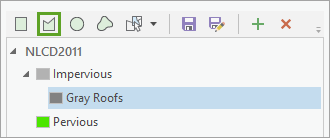

First, you'll modify the default schema to contain two parent classes: Impervious and Pervious.

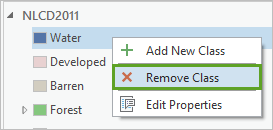

- In the Image Classification Wizard pane, on the Training Samples Manager page, right-click Water and choose Remove Class.

- In the Remove Class window, click Yes.

- Remove the other seven default classes (Developed, Barren, Forest, Shrubland, Herbaceous, Planted / Cultivated, and Wetlands).

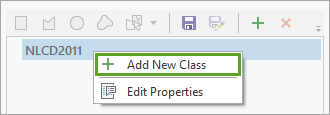

- Right-click NLCD2011 and choose Add New Class.

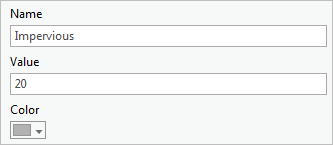

- On the Add New Class page, set the following parameters:

- For Name, type Impervious.

- For Value, type 20.

- For Color, choose Gray 30%.

Tip:

To see the name of a color, point to the color in the color palette selector and the color name will appear.

The value 20 is the number that will be attributed to all segments identified as impervious through the classification process. It is more of a numeric label and is not intended to be used in any calculations.

- Click OK.

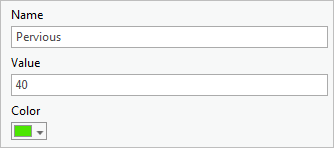

- Right-click NLCD2011 and choose Add New Class. On the Add New Class page, set the following parameters:

- For Name, type Pervious.

- For Value, type 40.

- For Color, choose Quetzal Green.

- Click OK.

Create subclasses and training samples

Next, you'll add subclasses to each class that represent types of land cover. If you attempted to classify the segmented image into only pervious and impervious surfaces, the classification would be too generalized and likely have many errors. By classifying the image based on more specific land-use types, you'll create a more accurate classification. Later, you'll reclassify these subclasses into their parent classes.

First, you'll add a subclass for gray roof surfaces and create training samples for it. Then, you'll create additional subclasses and create training samples for them, too.

- Right-click the Impervious parent class and choose Add New Class. Add a class named Gray Roofs with a value of 21 and a color of Gray 50%.

In this tutorial, you won't create other roof types. However, in a project with more diverse buildings represented in the imagery, you might consider creating a red roof land-use type, since their spectral characteristics are different from gray roofs.

Next, you'll create a training sample for this subclass.

- Confirm the Gray Roofs class is selected. Click the Polygon button.

- Zoom to the cul-de-sac to the northwest of the neighborhood.

Tip:

While the Polygon tool is on, you can navigate the map by holding down the C key and using the mouse to zoom and pan.

- On the northernmost roof in the cul-de-sac, draw a polygon. Double-click to finish the drawing. Make sure the polygon covers only pixels that comprise the roof.

Note:

The polygon does not need to cover the entire roof. It just needs to be a sample of the roof. Most importantly, it should only include roof material.

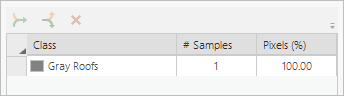

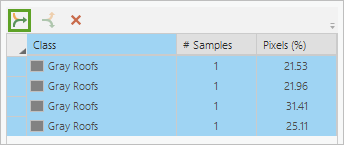

A row is added to the wizard for your new training sample.

When creating training samples, you want to cover a large number of pixels for each land-use type. For this tutorial, about six samples for each land-use type will be enough, but for a real project, where the imagery covers a much larger extent, you might need significantly more samples.

You'll create more training samples to represent the roofs of the houses.

- Draw additional polygons on the roofs of some nearby houses.

Every training sample that you make is added to the wizard. Although you have only drawn training samples on roofs, each training sample currently exists as its own class. You'll eventually want all gray roofs to be classified as the same value, so you'll merge the training samples that you created into one class.

- In the wizard, click the first row to select it. Press Shift and click the last row to select all training samples.

- Above the list of training samples, click the Collapse button.

The training samples collapse into one class. You can continue to add more training samples for gray roofs and merge them into the Gray Roofs class. The optimum strategy is to gather samples throughout the entire image.

Next, you'll add more land-use types.

- Right-click Impervious and choose Add New Class to create two more impervious subclasses based on the following table (the colors don't have to be a perfect match):

| Subclass | Value | Color |
| --- | --- | --- |
| Roads | 22 | Cordovan Brown |
| Driveways | 23 | Nubuck Tan |

- Right-click Pervious and choose Add New Class to create five pervious subclasses based on the following table:

| Subclass | Value | Color |
| --- | --- | --- |
| Bare Earth | 41 | Medium Yellow |
| Grass | 42 | Medium Apple |
| Trees | 43 | Leaf Green |
| Water | 44 | Cretan Blue |
| Shadows | 45 | Sahara Sand |

Note:

The eight subclasses you've created are specific to the land-use types for this image. Images of different locations may have different types of land-use or ground features that should be represented in a classification.

Shadows are not actual surfaces and cannot be either pervious or impervious. However, shadows are usually cast by tall objects such as houses or trees and are more likely to cover grass or bare earth, which are pervious surfaces.
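The parent classes and subclasses form a small hierarchy. The Python sketch below records it as a plain dictionary, using the class names and values from the steps above. It is an illustration only and does not reproduce the format of the schema file that the wizard saves. The names `SCHEMA` and `parent_of` are hypothetical.

```python
# Class values and names taken from the steps above.
SCHEMA = {
    20: ("Impervious", {21: "Gray Roofs", 22: "Roads", 23: "Driveways"}),
    40: ("Pervious", {41: "Bare Earth", 42: "Grass", 43: "Trees",
                      44: "Water", 45: "Shadows"}),
}


def parent_of(subclass_value):
    """Return (parent value, parent name) for a subclass value."""
    for parent_value, (parent_name, subclasses) in SCHEMA.items():
        if subclass_value in subclasses:
            return parent_value, parent_name
    raise KeyError(f"Unknown subclass value: {subclass_value}")


print(parent_of(45))  # (40, 'Pervious')
```

Numbering each subclass within its parent's range (21 to 23 under 20, 41 to 45 under 40) makes the relationship easy to read at a glance, although the values themselves are only labels.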

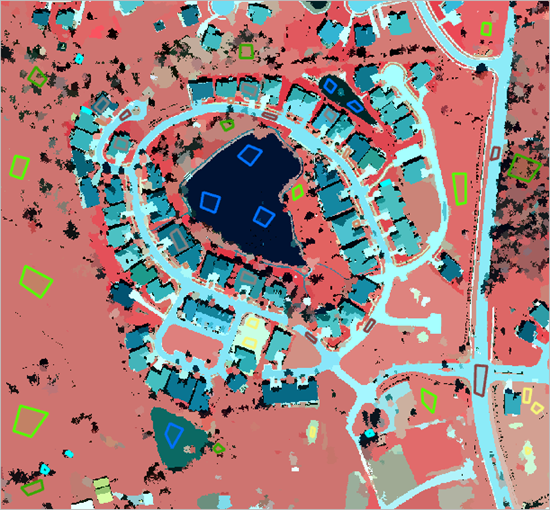

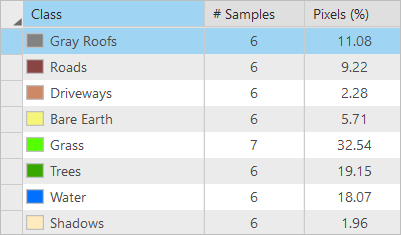

- Draw about six or seven training samples for each land-use type throughout the whole image. Zoom and pan throughout the image as needed.

Tip:

You can also turn the Preview_Segmented layer off and on to see the Louisville Neighborhood Extracted Bands layer to make better sense of the landscape.

- Collapse training samples that represent the same types of land use into one class.

Note:

The numbers associated with each class will differ depending on how many samples you drew and how large they were.

- When you are satisfied with your training samples, at the top of the Training Samples Manager pane, click the Save button.

Your customized classification schema is saved in case you want to use it again.

- Click Next.

Classify the image

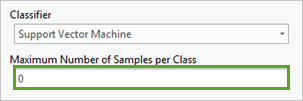

Now that you have created the training samples, you'll choose the classification method. Each classification method uses a different statistical process involving your training samples. You'll use the Support Vector Machine classifier, which can handle larger images and is less susceptible to discrepancies in your training samples. Then, you'll train the classifier with your training samples and create a classifier definition file. This file will be used during the classification. Once you create the file, you'll classify the image. Lastly, you'll reclassify the pervious and impervious subclasses into their parent classes, creating a raster with only two classes.

- In the Image Classification Wizard pane, on the Train page, confirm that Classifier is set to Support Vector Machine.

For the next parameter, you can specify the maximum number of samples to use for defining each class. You want to use all your training samples, so you'll change the maximum number of samples per class to 0. Changing the maximum to 0 is a trick to ensure all training samples are used.

- For Maximum Number of Samples per Class, type 0.
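As a rough illustration of what a per-class cap does, the Python sketch below keeps every sample when the cap is 0 and otherwise draws a random subset. This is an assumption about the general behavior, based only on the description above, and not the tool's actual sampling logic. The function name `cap_samples` is hypothetical.

```python
import random


def cap_samples(samples, max_per_class, seed=0):
    """Return at most `max_per_class` samples; 0 means keep them all."""
    if max_per_class == 0 or len(samples) <= max_per_class:
        return list(samples)
    # Draw a reproducible random subset when the cap applies.
    return random.Random(seed).sample(list(samples), max_per_class)


gray_roof_samples = ["roof_1", "roof_2", "roof_3", "roof_4", "roof_5", "roof_6"]
print(len(cap_samples(gray_roof_samples, 0)))  # 6
print(len(cap_samples(gray_roof_samples, 4)))  # 4
```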

Next, you'll train the classifier and display a preview.

- Accept the other default parameter values and click Run.

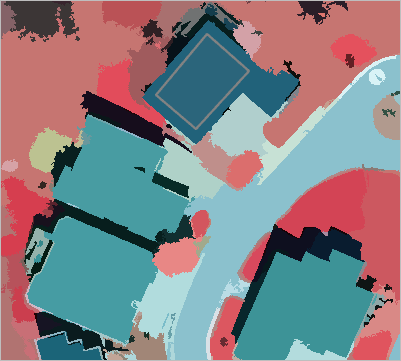

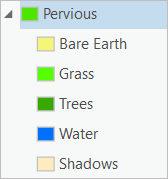

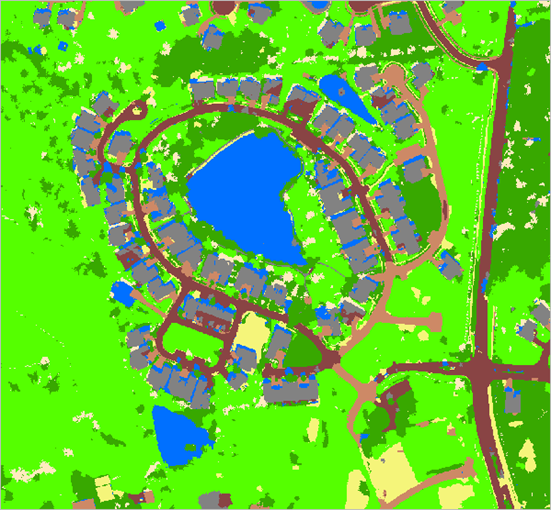

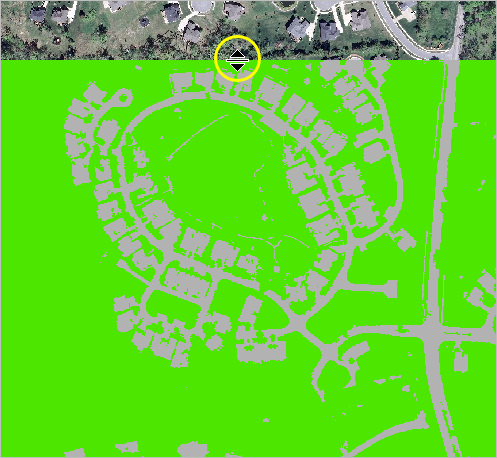

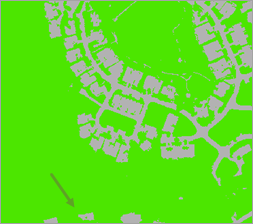

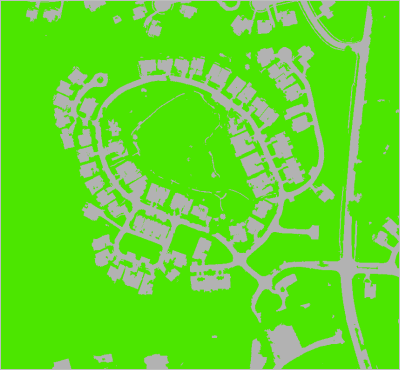

The process may take a long time, as multiple processes are run. First, the image is segmented (previously, you only segmented the image on the fly, which is not permanent). Then, the classifier is trained and the classification performed. When the process finishes, a preview of the classification is displayed on the map. The colors in the preview correspond to the colors you chose for each training sample class.

Note:

Your results depend on the training samples you drew and will differ from the example images.

At full zoom extent, your classification preview may not appear fully accurate. In the example image, for instance, most grass (light green) was classified as trees (dark green). Even more concerning, as it would affect your analysis of pervious and impervious surfaces, some roads have been classified as trees.

However, like the segmentation preview, this classification is being performed on the fly. At the full zoom extent of the data, the classification is done with less accuracy to quickly display the full dataset. When you zoom in, you'll see a more accurate classification.

- In the Contents pane, uncheck Preview_Segmented to turn it off. Zoom in and wait for the classification preview to load again.

Depending on your training samples, your classification preview now appears to be more accurate. In the example image, trees and roads are more accurately classified.

You may notice that some features are still classified incorrectly. For instance, in the example image, many shadows were incorrectly classified as water, and some roads were incorrectly classified as driveways.

Classification is not an exact science and rarely will every feature be classified perfectly. If you see only a few inaccuracies, you can correct them manually later in the wizard. If you see a large number of inaccuracies, you may need to create more training samples. Also, in this case, shadows and water are both pervious, and roads and driveways are both impervious, so these inaccuracies won't change the final classification into pervious and impervious land cover.

Note:

If, when zoomed in, you still see a large number of inaccuracies (such as roads being classified incorrectly as trees), you may want to modify your training samples. To do so, click Previous. Create additional training samples or delete the existing ones and create new training samples, then run the Train tool again.

- If you are satisfied with the classification preview, click Next.

The next page is the Classify page. You'll use this page to run the actual classification and save it in your geodatabase.

- For Output Classified Dataset, change the output name to Classified_Louisville.tif. Leave the remaining parameters unchanged and click Run.

The process runs and the classified raster layer Classified_Louisville is added to the map. It looks similar to the zoomed-in preview, even at the full extent of the data.

- In the Contents pane, turn off the Preview_Classified layer. In the Image Classification Wizard pane, click Next.

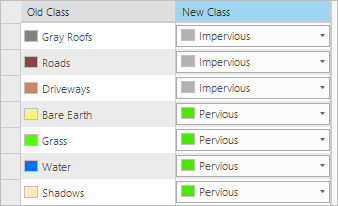

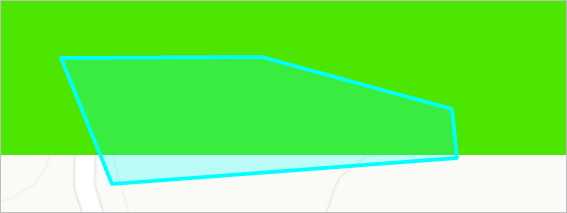

The next page is the Merge Classes page. You'll use this page to merge subclasses into their parent classes. Your raster currently has eight classes, each representing a type of land use. While these classes were essential for an accurate classification, you are only interested in whether each class is pervious or impervious. You'll merge the subclasses into the Pervious and Impervious parent classes to create a raster with only two classes.

- For each class, in the New Class column, choose either Pervious or Impervious.

On the map, a preview shows what the reclassified image will look like. When you change all of the classes, the preview should only have two classes, representing pervious and impervious surfaces.
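Conceptually, merging classes is a lookup from each subclass value to its parent value. The Python sketch below shows this on a tiny hypothetical grid, using the class values assigned earlier in the tutorial. It illustrates the idea and is not the wizard's implementation.

```python
# Subclass value -> parent class value (20 = Impervious, 40 = Pervious).
MERGE = {21: 20, 22: 20, 23: 20, 41: 40, 42: 40, 43: 40, 44: 40, 45: 40}


def merge_classes(raster):
    """Replace every subclass value in a 2D grid with its parent value."""
    return [[MERGE[value] for value in row] for row in raster]


# Hypothetical classified cells containing all eight subclasses.
classified = [
    [21, 23, 42],
    [22, 22, 43],
    [45, 41, 44],
]
merged = merge_classes(classified)
print(merged)  # [[20, 20, 40], [20, 20, 40], [40, 40, 40]]
```

This also shows why confusing shadows with water, or roads with driveways, does not affect the final result: both members of each pair map to the same parent value.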

- Click Next.

Reclassify errors

The final page of the wizard is the Reclassifier page. This page includes tools for reclassifying small errors in the raster dataset. You'll use this page to fix an incorrect classification in your raster.

- In the Contents pane, uncheck all layers except the Preview_Reclass and Louisville_Neighborhood.tif layers. Click the Preview_Reclass layer to select it.

- On the ribbon, click the Raster Layer tab. In the Compare group, click Swipe.

- Drag the pointer across the map to visually compare the preview to the original neighborhood imagery.

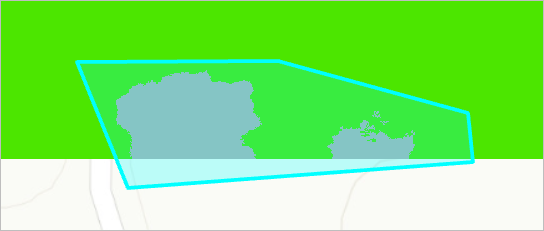

One inaccuracy that is present in the example image is that some bare earth patches south of the neighborhood were misclassified as impervious. These patches are not connected to any other impervious objects, so you can reclassify them with relative ease.

Note:

Depending on your training samples, you may encounter different errors that need to be reclassified than the ones outlined in this workflow.

- On the ribbon, click the Map tab. In the Navigate group, click the Explore button.

- Zoom to the patches of bare earth area at the southern edge of the image.

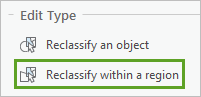

- In the wizard, click Reclassify within a region.

With this tool, you can draw a polygon on the map and reclassify everything within the polygon.

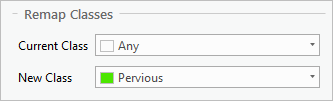

- In the Remap Classes section, confirm that Current Class is set to Any. For New Class, choose Pervious.

With these settings, any pixels in the polygon will be reclassified to pervious surfaces. Next, you'll reclassify the bare earth patches.

- Draw a polygon around the bare earth patches. Make sure you do not include any other impervious surfaces in the polygon.

The bare earth patches are automatically reclassified as a pervious surface.
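Reclassifying within a region amounts to finding the cells that fall inside the drawn polygon and assigning them the new class. The Python sketch below does this with a standard ray-casting point-in-polygon test on cell centers. It is an illustration under simplifying assumptions (cell coordinates equal grid indices, and a cell counts as inside when its center is inside), and the function names are hypothetical.

```python
def point_in_polygon(x, y, polygon):
    """Ray-casting test: True if (x, y) lies inside `polygon`,
    given as a list of (x, y) vertices."""
    inside = False
    for i in range(len(polygon)):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % len(polygon)]
        # Only edges that straddle the horizontal line through y can cross.
        if (y1 > y) != (y2 > y):
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def reclassify_region(raster, polygon, new_value):
    """Set every cell whose center falls inside the polygon to `new_value`."""
    return [
        [new_value if point_in_polygon(col + 0.5, row + 0.5, polygon) else value
         for col, value in enumerate(cells)]
        for row, cells in enumerate(raster)
    ]


IMPERVIOUS, PERVIOUS = 20, 40
raster = [
    [40, 40, 40, 40],
    [40, 20, 20, 40],
    [40, 20, 20, 40],
    [20, 40, 40, 40],
]
# Polygon drawn around the misclassified patch in the middle of the grid.
patch = [(1, 1), (3, 1), (3, 3), (1, 3)]
fixed = reclassify_region(raster, patch, PERVIOUS)
for row in fixed:
    print(row)
# [40, 40, 40, 40]
# [40, 40, 40, 40]
# [40, 40, 40, 40]
# [20, 40, 40, 40]
```

The impervious cell in the bottom-left corner lies outside the polygon, so it keeps its value. This is why the polygon should enclose only the surfaces you intend to change.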

Note:

If you make a mistake, you can undo the reclassification by unchecking it in the Edits Log pane.

While you likely noticed other inaccuracies in your classification, for the purposes of this tutorial, you won't make any more edits.

- Zoom to the full extent of the data.

- In the Image Classification Wizard, for Final Classified Dataset, type Louisville_Impervious.tif (including the .tif extension).

- Click Run. When the tool completes, click Finish.

The reclassified raster has been added to the map.

- In the Contents pane, turn off Preview_Reclass.

- Save the project.

Note:

There are methods to systematically quantify the level of accuracy of a classification. You can learn about this in the tutorial Assess the accuracy of a perviousness classification, where you'll randomly generate accuracy assessment points, ground truth them, create a confusion matrix, and obtain a classification accuracy percentage. The tutorial includes steps to perform the accuracy assessment for the impervious and pervious classification you just completed.
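As a brief illustration of the two quantities mentioned in the note, the Python sketch below builds a confusion matrix and computes an overall accuracy from a handful of hypothetical assessment points. The point values and function names are made up, and this is not the procedure from the accuracy assessment tutorial.

```python
from collections import Counter


def confusion_matrix(reference, predicted):
    """Count (reference class, predicted class) pairs."""
    return Counter(zip(reference, predicted))


def overall_accuracy(reference, predicted):
    """Share of points whose predicted class matches the reference class."""
    correct = sum(r == p for r, p in zip(reference, predicted))
    return correct / len(reference)


# Hypothetical ground truth and classified values at five points.
reference = ["Impervious", "Impervious", "Pervious", "Pervious", "Pervious"]
predicted = ["Impervious", "Pervious", "Pervious", "Pervious", "Impervious"]

matrix = confusion_matrix(reference, predicted)
print(matrix[("Pervious", "Pervious")])        # 2
print(overall_accuracy(reference, predicted))  # 0.6
```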

You have classified imagery of a neighborhood in Louisville to determine which land cover is pervious and which is impervious. Next, you'll calculate the area of impervious surfaces per land parcel so the local government can assign storm water fees.

Calculate impervious surface area

Using the results of the classification, you'll calculate the area of impervious surface per parcel and symbolize the parcels accordingly.

Tabulate the area

To determine the area of impervious and pervious surfaces within each parcel of land in the neighborhood, you'll first calculate the area and store the results in a stand-alone table. Then, you'll join the table to the Parcels layer.

- On the ribbon, click the Analysis tab. In the Geoprocessing group, click Tools.

The Geoprocessing pane appears.

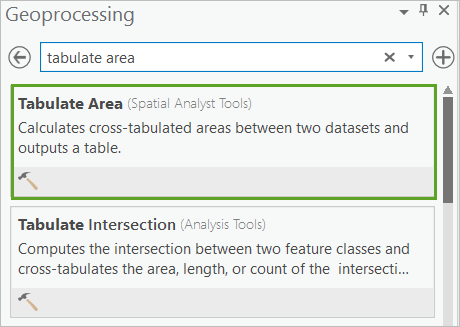

- In the Geoprocessing pane, search for the Tabulate Area tool and open it.

This tool calculates the area of some classes (in this tutorial, pervious and impervious) within specified zones (in this tutorial, each parcel).

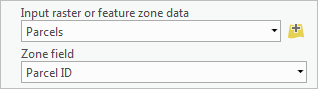

- For Input raster or feature zone data, choose the Parcels layer. Confirm that the Zone field parameter populates with the Parcel ID field.

The zone field must be an attribute that identifies each zone uniquely. The Parcel ID field has a unique identification number for each feature, so you'll leave the parameter unchanged.

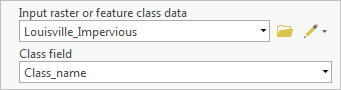

- For Input raster or feature class data, choose the Louisville_Impervious layer.

- For Class field, choose Class_name.

The class field specifies which field in the raster defines the classes whose area is calculated. You want to know the area of each class in your reclassified raster (pervious and impervious), so the Class_name field is appropriate.

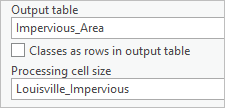

- For Output table, click the text field, confirm that the output location is the Neighborhood_Data geodatabase, and change the output name to Impervious_Area.

The final parameter, Processing cell size, determines the cell size for the area calculation. By default, the cell size is the same as the input raster layer Louisville_Impervious, which is half a foot (in this case). You'll leave this parameter unchanged.
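The arithmetic behind this kind of tabulation is a count of cells per zone and class, multiplied by the area of one cell. The Python sketch below shows this with a 0.5-foot cell size, so each cell covers 0.25 square feet. It assumes the zones have already been converted to a grid aligned with the classified raster, which is a simplification. The parcel IDs and function name are hypothetical.

```python
from collections import defaultdict

CELL_SIZE_FT = 0.5  # 6-inch cells, matching the imagery used here


def tabulate_area(zones, classes, cell_size=CELL_SIZE_FT):
    """Sum the area of each class inside each zone.

    `zones` and `classes` are same-shaped 2D grids: one holds a zone ID
    per cell, the other a class name per cell.
    """
    cell_area = cell_size ** 2
    table = defaultdict(lambda: defaultdict(float))
    for zone_row, class_row in zip(zones, classes):
        for zone_id, class_name in zip(zone_row, class_row):
            table[zone_id][class_name] += cell_area
    return {zone: dict(areas) for zone, areas in table.items()}


# Hypothetical parcel IDs and classification for a 2 x 4 block of cells.
zones = [
    [101, 101, 102, 102],
    [101, 101, 102, 102],
]
classes = [
    ["Impervious", "Pervious", "Pervious", "Pervious"],
    ["Impervious", "Impervious", "Pervious", "Impervious"],
]
areas = tabulate_area(zones, classes)
print(areas[101])  # {'Impervious': 0.75, 'Pervious': 0.25}
print(areas[102])  # {'Pervious': 0.75, 'Impervious': 0.25}
```

A larger processing cell size would mean fewer, coarser cells and therefore a less precise area estimate for small parcels.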

- Click Run.

The tool runs and the table is added to the Contents pane, in the Standalone Tables section. You'll take a look at the table that you created.

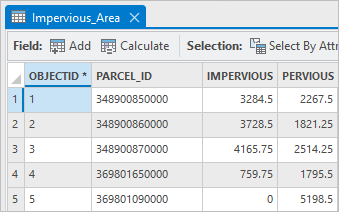

- At the bottom of the Contents pane, under Standalone Tables, right-click the Impervious_Area table and choose Open.

Note:

Depending on your training samples, your table's values may differ from those in the example image.

The table has a standard ObjectID field, as well as three other fields. The first is the Parcel_ID field from the Parcels layer, showing the unique identification number for each parcel. The next two are the class fields from the Louisville_Impervious raster layer. The Impervious field shows the area (in square feet) of impervious surfaces per parcel, while the Pervious field shows the area of pervious surfaces.
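The idea behind this table can be sketched in a few lines of Python. This is only a conceptual illustration, not the tool's actual implementation: it assumes the area is obtained by counting the raster cells of each class that fall in each zone and multiplying by the area of one cell (0.5 ft x 0.5 ft = 0.25 sq ft). The grids, the parcel IDs 101 and 102, and the function name `tabulate_area` are hypothetical.

```python
from collections import defaultdict

CELL_SIZE_FT = 0.5                    # processing cell size used in the tutorial
CELL_AREA_SQFT = CELL_SIZE_FT ** 2    # 0.25 square feet per cell

I, P = "Impervious", "Pervious"

# Hypothetical 4 x 6 grids; each position is one raster cell.
# zones: the parcel each cell falls in (made-up IDs 101 and 102).
zones = [
    [101, 101, 101, 102, 102, 102],
    [101, 101, 101, 102, 102, 102],
    [101, 101, 101, 102, 102, 102],
    [101, 101, 101, 102, 102, 102],
]
# classes: the class name of each cell in the reclassified raster.
classes = [
    [I, I, P, P, P, P],
    [I, I, P, P, I, I],
    [I, P, P, P, I, I],
    [P, P, P, P, P, P],
]


def tabulate_area(zones, classes, cell_area):
    """Return {zone_id: {class_name: area}} by counting cells per zone and class."""
    table = defaultdict(lambda: defaultdict(float))
    for zone_row, class_row in zip(zones, classes):
        for zone_id, class_name in zip(zone_row, class_row):
            table[zone_id][class_name] += cell_area
    return {zone_id: dict(areas) for zone_id, areas in table.items()}


impervious_area = tabulate_area(zones, classes, CELL_AREA_SQFT)
for parcel_id, areas in sorted(impervious_area.items()):
    print(parcel_id, areas[I], areas[P])
# 101 1.25 1.75
# 102 1.0 2.0
```

Each output row corresponds to one parcel, with one area column per class, which mirrors the layout of the Impervious_Area table.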

- Close the table.

You now have the area of impervious surfaces per parcel, but only in a stand-alone table. Next, you'll join the stand-alone table to the Parcels layer so that the area information becomes available in the layer. You'll perform the join based on the Parcel ID field, which is common to the Parcels layer and the stand-alone table.

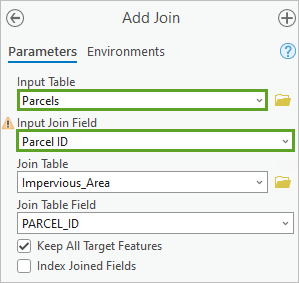

- In the Contents pane, right-click the Parcels layer, point to Join and Relates, and choose Add Join.

The Add Join window appears.

- In the Add Join window, set the following parameters:

- For Input Table, confirm that Parcels is selected.

- For Input Field, choose Parcel ID.

- For Join Table, confirm that Impervious_Area is selected.

- For Join Field, confirm Parcel_ID is selected.

Note:

You can ignore the warning that appears next to Input Join Field. The number of features in the Parcels layer is not very large, so it is not an issue that the Parcel ID field is not indexed.

- Accept the default values for the other parameters and click OK.

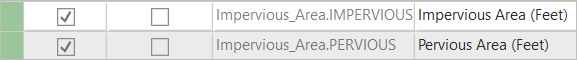

- In the Contents pane, right-click the Parcels layer and choose Attribute Table. Confirm that the attribute table now includes the following fields:

- IMPERVIOUS

- PERVIOUS
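Conceptually, the join matches each parcel to the table row that has the same parcel identifier and appends that row's area fields. The following minimal Python sketch illustrates the matching only. It assumes that parcels without a matching row are kept with empty values. The function name `add_join`, the sample rows, and parcel 103 are hypothetical.

```python
# Hypothetical rows standing in for the Parcels layer's attribute table.
parcels = [
    {"Parcel ID": 101},
    {"Parcel ID": 102},
    {"Parcel ID": 103},   # made-up parcel with no row in the join table
]

# Hypothetical rows standing in for the Impervious_Area stand-alone table.
impervious_area = [
    {"Parcel_ID": 101, "IMPERVIOUS": 1.25, "PERVIOUS": 1.75},
    {"Parcel_ID": 102, "IMPERVIOUS": 1.0, "PERVIOUS": 2.0},
]


def add_join(input_rows, input_field, join_rows, join_field):
    """Append the join table's fields to each input row with a matching key."""
    # Index the join table by its key so that each lookup is a dictionary access.
    lookup = {row[join_field]: row for row in join_rows}
    # Fields contributed by the join table (everything except the key itself).
    extra_fields = [name for name in join_rows[0] if name != join_field]

    joined = []
    for row in input_rows:
        match = lookup.get(row[input_field], {})
        merged = dict(row)
        for name in extra_fields:
            merged[name] = match.get(name)   # None when there is no match
        joined.append(merged)
    return joined


joined_parcels = add_join(parcels, "Parcel ID", impervious_area, "Parcel_ID")
for row in joined_parcels:
    print(row)
```

The key fields can have different names in the two tables (here, Parcel ID and Parcel_ID), as long as their values match.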

Clean up the attribute table

Now that the tables have been joined, you'll change the field aliases to be more informative.

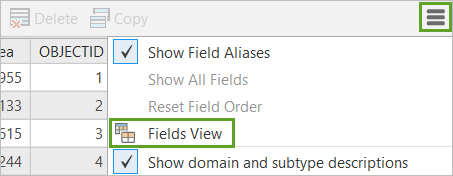

- In the Parcels attribute table, click the options button and choose Fields View.

The Fields view for the Parcels attribute table appears.

With the Fields view, you can add or delete fields, rename them, change their aliases, or adjust other settings. Here, you'll edit the aliases of the two area fields.

- In the Alias column, change the alias of the Impervious field to Impervious Area (sq ft). Change the alias of the Pervious field to Pervious Area (sq ft).

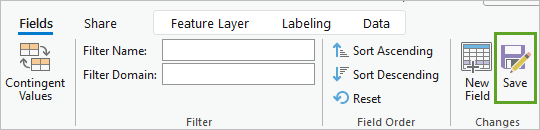

- On the ribbon, on the Fields tab, in the Manage Edits group, click Save.

The changes to the attribute table are saved.

- Close the Fields view and close the attribute table.

Symbolize the parcels

Now that you have impervious area values assigned to each parcel, you'll symbolize the parcels so that you can compare them visually by impervious area.

- In the Contents pane, turn on the Parcels layer. Turn off the Louisville_Impervious layer.

- Right-click Parcels and choose Symbology.

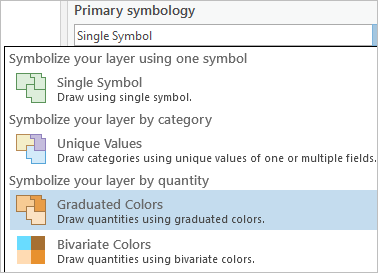

The Symbology pane for the Parcels layer appears. Currently, the layer is symbolized with a single symbol, a yellow outline. You'll symbolize the layer so that parcels with large areas of impervious surfaces appear different from those with small areas.

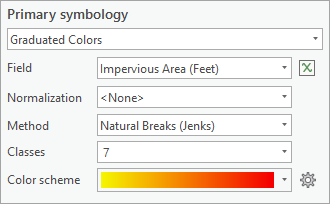

- In the Symbology pane, for Primary symbology, choose Graduated Colors.

A series of parameters becomes available. First, you'll change the field that determines the symbology.

- For Field, choose Impervious Area (sq ft).

The symbology on the layer changes automatically. However, there is little variation in the parcels' symbology because of the small number of classes.

- Change Classes to 7 and change Color scheme to Yellow to Red.

Tip:

To see a color scheme's name, point to it in the list of color schemes.

The layer symbology changes again.

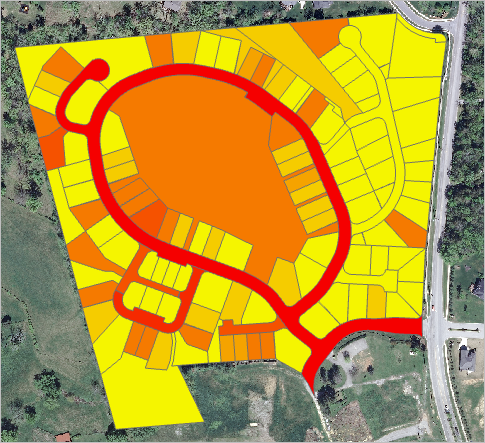
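To see why the number of classes matters, consider how graduated colors assign each parcel to a class based on its value. The sketch below uses equal-interval breaks as a simple stand-in. The tutorial does not state which classification method is applied, so the method, the function names, and the sample values are all assumptions made for illustration.

```python
def equal_interval_breaks(values, n_classes):
    """Return the upper bound of each class, splitting the value range evenly."""
    lowest, highest = min(values), max(values)
    width = (highest - lowest) / n_classes
    return [lowest + width * i for i in range(1, n_classes + 1)]


def classify(value, breaks):
    """Return the index of the first class whose upper bound covers the value."""
    for index, upper in enumerate(breaks):
        if value <= upper:
            return index
    return len(breaks) - 1   # guard against floating-point rounding at the top


# Hypothetical impervious areas (sq ft) for five parcels.
areas = [0, 700, 1400, 3500, 7000]

breaks_7 = equal_interval_breaks(areas, 7)
breaks_2 = equal_interval_breaks(areas, 2)

classes_7 = [classify(a, breaks_7) for a in areas]
classes_2 = [classify(a, breaks_2) for a in areas]

print(classes_7)   # [0, 0, 1, 3, 6]
print(classes_2)   # [0, 0, 0, 0, 1]
```

With two classes, four of the five sample parcels share the same color. With seven classes, the same values spread across four different classes, so differences between parcels become visible.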

To interpret this map correctly, remember that the symbology indicates the total impervious area of each parcel, not the percentage of impervious surface. The parcels with the largest area of impervious surfaces appear to correspond to roads. These parcels are very large and almost entirely impervious. The large parcel with the pond and lawn in the center is symbolized in orange, indicating a somewhat high impervious area. This is because the impervious path around the pond is thin but long, so it adds up to a sizable area. There is great variation among the smaller parcels, based on the size of the buildings, driveways, and terraces they contain. In the future, owners could decrease the impervious surface in their parcels by replacing an impervious driveway or terrace with a pervious one, or perhaps by installing a green roof on their house.

Note:

You could choose to symbolize the layer by the percentage of impervious area, instead of the total impervious area. However, most storm water fees are based on the total impervious area, making the current symbology more appropriate for the use case.
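The difference between the two measures can be illustrated with a short Python sketch. The percentage is assumed here to be the impervious area divided by the sum of the impervious and pervious areas. The parcel names and area values are hypothetical and are not taken from the tutorial data.

```python
def percent_impervious(impervious, pervious):
    """Return the impervious share of a parcel's tabulated area, in percent."""
    total = impervious + pervious
    if total == 0:
        return 0.0   # avoid dividing by zero for an empty parcel
    return 100.0 * impervious / total


# Hypothetical parcels: (name, impervious sq ft, pervious sq ft).
parcels = [
    ("road", 20000, 500),
    ("pond and lawn", 6000, 54000),
    ("small house", 3000, 1000),
]

# Rank the parcels from highest to lowest by each measure.
by_total = [name for name, imp, per in
            sorted(parcels, key=lambda p: p[1], reverse=True)]
by_percent = [name for name, imp, per in
              sorted(parcels, key=lambda p: percent_impervious(p[1], p[2]),
                     reverse=True)]

print(by_total)     # ['road', 'pond and lawn', 'small house']
print(by_percent)   # ['road', 'small house', 'pond and lawn']
```

In this made-up example, the pond parcel ranks second by total impervious area but last by percentage, which is the same effect described for the large central parcel on the map.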

- Close the Symbology pane.

- Save the project.

In this tutorial, you classified an aerial image of a neighborhood in Louisville, Kentucky, to show areas that were pervious and impervious to water. You then assessed the accuracy of your classification and determined the area of impervious surfaces per land parcel. With the information that you derived in this tutorial, the local government would be better equipped to determine storm water bills.

You can use this workflow with your own data. As long as you have high-resolution, multispectral imagery of an area, you can classify its surfaces.

Note:

To go further, consider doing the tutorial Assess the accuracy of a perviousness classification. Building on the results you just obtained, you'll learn how to formally assess the accuracy of your classification. This is an important step to prove the reliability of your results.

You can find more tutorials like this on the Introduction to Imagery & Remote Sensing page and in the tutorial gallery.